To 4K Or Not 4K? The Pros & Cons Of Ultra-HD Gaming

Eight million pixels may not be as good as it sounds

With Laird Towers currently undergoing major renovations, RPS's hardware coverage has been forced to retreat to the vaults. But that hasn't stopped me. No, I've battled through the dust, the rubble, the builders lumbering about the place at ungodly hours of the morning (I regard consciousness before 9:30am as rather uncivilised) and the relentless tea-making to bring you some reflections on 4K gaming. We've covered several interesting alternatives to 4K of late including curved super-wide monitors, high refresh rates, IPS panels and frame synced screens. So does that experience put a new spin on plain old 4K, aka gaming at a resolution of 3,840x2160?

The context here is threefold. The first bit involves the aforementioned flotilla of interesting new screens with fascinating new technology, pretty much all of it gaming relevant. Whether it's 34-inch curved ubertrons, 27-inch IPS panels with 144Hz refresh capability or the Nvidia G-Sync versus AMD FreeSync thang, it's all aimed at PC gamers.

That's right, somebody still cares about PC gamers and if I'm honest it's a little against my expectations of a few years ago when the monitor market had completed the jump to 1080p and was looking rather stagnant. The exception to all this innovation is arguably LCD panel quality itself, which has progressed gradually rather than dramatically. As for the perennial great hope for the future of displays, OLED technology, it's nowhere to be seen.

IPS, high refresh and frame syncing from Asus - is 4K even relevant?

The next item on my 4K housekeeping list involves graphics cards. Nothing hammers a GPU like 4K resolutions. It's easy to forget the full implications of 4K resolutions. But even if you remember, I'm going to remind you anyway.

For PCs, 4K typically constitutes 3,840 by 2,160 pixels, which in turn works out at roughly eight million pixels. For reasonably smooth gaming, let's be conservative and say you want to average at least 45 frames per second.

That means your graphics subsystem needs to crank out no fewer than 360 million fully 3D rasterised, vertex shaded, tessellated, bump mapped, textured – whatever – pixels every bleedin' second. And that, ladies and germs, is a huge ask.

Up the ante to 120Hz-plus refresh or flick the switch on anti-aliasing techniques that involve rendering at higher resolutions internally on the GPU and you could very well be staring a billion pixels per second in the face. The humanity.
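For anyone who wants to check the sums, here's a rough back-of-the-envelope sketch in Python. It only counts pixels per second and ignores how much work each pixel actually takes, and the `supersample` factor is my own hypothetical stand-in for anti-aliasing that renders internally at a higher resolution, not a figure from any particular game or driver.

```python
def pixels_per_second(width, height, fps, supersample=1):
    """Pixels the GPU must produce each second.

    supersample is a hypothetical multiplier on the pixel count,
    standing in for AA modes that render above native resolution.
    """
    return width * height * fps * supersample


# Native 4K as used in this article: 3,840 by 2,160.
uhd_frame = 3840 * 2160

# The "conservative" 45 frames per second target.
uhd_45fps = pixels_per_second(3840, 2160, 45)

# A 120Hz refresh rate with every refresh filled by a new frame.
uhd_120fps = pixels_per_second(3840, 2160, 120)

# 60fps with an assumed 2x supersampling factor.
uhd_60fps_2x = pixels_per_second(3840, 2160, 60, supersample=2)

print(f"Pixels per 4K frame:      {uhd_frame:,}")
print(f"4K at 45fps:              {uhd_45fps:,} per second")
print(f"4K at 120fps:             {uhd_120fps:,} per second")
print(f"4K at 60fps, 2x supersample: {uhd_60fps_2x:,} per second")
```

Run the exact numbers and the 45fps figure comes out a shade above the rounded 360 million, while the 120Hz and supersampled cases both land within a whisker of a billion.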

That dinky little thing on the right is my trusty 30-inch Samsung

Now it just so happens that I have philosophical-going-on-ideological objections to multi-GPU rendering set ups. Multi-GPU splits opinion, but personally I've always found it unreliable and lacking transparency even when it does work. In short, I find myself constantly wondering whether I'm getting the full multi-GPU Monty and it eventually sends me mad.

Long story short, I'm only interested in 4K gaming on a single GPU. Enter, therefore, the MSI GTX 980Ti GAMING 6G. Handily loaned to me by MSI for a little while, it doesn't come cheap at £550 / $680. But partly thanks to a core clockspeed that's been bumped up by about 15 per cent over the reference clocks, what it does do is give you probably 90 per cent of the gaming grunt of the fastest single GPU on the planet, Nvidia's Titan X, for about two thirds of the cash.

Maxwell to the Max: MSI's 980Ti with some overclocking

It's also based on what I think is the best current graphics tech, Nvidia's Maxwell architecture and also has two massive (read, very quiet running) fans that actually shut down when the card is idling. Yay. Anyway, as things stand, if any graphics card can deliver a workable single-GPU 4K gaming experience, it's this MSI beast.

The final part of my 4K background check involves the screen itself. I am absolutely positive about this bit. If you're going to go 4K, two things are critical. First, it needs to have a refresh rate of at least 60Hz, though the fact that 60Hz is currently as high as 4K goes remains a possible deal-breaker, more on which in a moment. Second, it needs to be huge.

Loads of full-sized DisplayPort sockets. Yay

Specifically, it needs to be huge because otherwise you lose the impact of all those pixels at normal viewing distances, and because to this day Windows operating systems are crap at scaling, so super-fine pixel pitches are problematic for everything non-gaming. How huge? I wouldn't want less than 40 inches for 4K.
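To put a rough number on the pixel pitch point, here's a minimal Python sketch that works out pixels per inch from a resolution and a diagonal. The 28-inch 4K and 27-inch 2,560 by 1,440 panels are assumed comparison points I've picked for illustration, not screens tested here.

```python
import math


def pixels_per_inch(width_px, height_px, diagonal_in):
    """Pixel density from resolution and diagonal size in inches."""
    diagonal_px = math.hypot(width_px, height_px)
    return diagonal_px / diagonal_in


# 4K at the 40-inch size argued for above.
ppi_4k_40 = pixels_per_inch(3840, 2160, 40)

# Assumed comparison: 4K squeezed into a 28-inch panel.
ppi_4k_28 = pixels_per_inch(3840, 2160, 28)

# Assumed comparison: 2,560 by 1,440 on a 27-inch panel.
ppi_1440p_27 = pixels_per_inch(2560, 1440, 27)

print(f"40-inch 4K:    {ppi_4k_40:.0f} PPI")
print(f"28-inch 4K:    {ppi_4k_28:.0f} PPI")
print(f"27-inch 1440p: {ppi_1440p_27:.0f} PPI")
```

On those assumptions, 4K at 40 inches ends up at much the same density as a 27-inch 1440p screen, which is why it can be used without leaning on Windows scaling, whereas the smaller 4K panel is far finer.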

All of which means right now, you're looking at just one monitor, at least as far as I am aware. That is the 40-inch Philips BDM4065UC. So, I've got one of those in too. Huzzah. And doesn't it make my trusty Samsung XL30 30 incher look positively puny?

Even at around £600 / $900, I reckon the Philips is fantastic value. It's not perfect. In fact it's far from perfect. But my god, it's a thing to behold.

So there you have it, 4K done as well as it currently gets on the PC courtesy of Philips and MSI and arguably at a not absolutely insane price, depending of course on your own means. So what's it actually like?

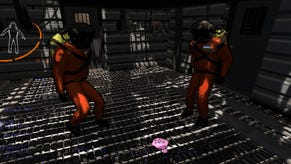

Sheer man power allowed the Romans to achieve 4K in 132 A.D. Probably...

The first thing you have to do is get over the slight borkiness of the Philips monitor. I'm not totally sure if it's a pure viewing angle thing or also involves the challenges of backlighting such a massive screen. But the apparent brightness and vibrancy falls off towards the bottom, particularly in the bottom corners, at least at normal viewing distances. Viewed at an HDTV-ish 10 feet, the problem disappears. Three or four feet from your nose and it's all too obvious.

The other issue is pixel response. With the pixel overdrive option at either of the two fastest settings, the inverse ghosting (as detailed here) is catastrophic. At the lowest setting, it's merely a bit annoying. You can also turn it off altogether. And then the panel is just a bit slow.

Oh and the stand isn't adjustable. At all. But let's turn to the biggest questions of all – frame rates and quality settings.

For the meat of this discussion, I'm going to use Witcher III. Why? Because it's right on the cusp of playability at 4K and that's handy for framing the broader debate. There are more demanding games, for instance Metro: Last Light, some of which are probably not truly goers at 4K. There are less demanding games, like the GRID racing series, which will fly at 4K. Witcher III is somewhere in the middle and serves as a handy hook on which to hang all this, nothing more.

Everything set to full reheat...

Is Witcher III any good as a game? Who cares, it looks ruddy glorious at 4K native and ultra settings. And yes, it is playable on the MSI 980Ti, even with anti-aliasing enabled.

In fact, toggling AA doesn't make much difference to the frame rate, which is either good because it means you may as well have it on, or bad when you consider that the MSI card is knocking out marginal frame rates in the mid 30s.

Actually the frame rate itself is tolerable and it's not the numbers that really matter. It's the feel. The possible deal breaker for some will be the substantially increased input lag when running at 4K.

4K with AA above, 4K with no AA below...

Crush the settings to low quality and the frame rate doesn't leap as you might expect. It only steps up to the mid 40s. The lag remains. So you lose a lot of visual detail for relatively little upside.

However, bump the resolution down to 1440p (i.e. 2,560x1,440) and the 980Ti shows its muscle. It'll stay locked at 60fps with V-sync enabled at ultra quality settings. And there's no more lag. Which then begs the question of how non-native looks on a 4K panel.

In isolation you'd probably say it looks pretty darn good interpolated. Indeed, you might not immediately spot it was interpolated if you hadn't been told, though you'd probably pick it if asked to make the call. But then you go back to 4K and the clarity and the detail is simply spectacular.

So these are the kinds of conundrums you're currently going to face at 4K. To be clear, you'll get a lot of variation from game to game. As another for instance, Total War: Rome II runs pretty nicely maxxed out at 4K. Notably, it runs lag free and looks fantabulous.

That said, running native for a lot of online shooters is probably a non-starter. For everything else, it all depends on personal preference.

The sheer scale and majesty of 4K at 40 inches is truly special, one of the wonders of the modern computing age. But the 34-inch super-wide panels do the magisterial vistas thing seriously well, too, and give single-GPU setups that critical bit of breathing room.

What happened to my fancy threads? 4K ultra quality above, 4K low quality below...

Meanwhile, as I 4K-gamed the evening away I was reminded of the bad old days of PC gaming when you were constantly playing off quality settings versus resolution. More recently, with a top-end GPU the usual drill involved maxxing everything out without a worry in most games. That's very liberating. With 4K, the settings stress returns.

Then there's the whole high refresh and adaptive refresh thing. There's no getting round it. If you are familiar with those technologies, you'll miss them here. Then there are the basic image quality flaws that come with this particular 4K panel – slight response and viewing angle borkiness, basically.

Very long story short, I'm afraid I don't have any easy answers. You pays your money, you takes your choice. Personally I don't even know what I prefer between 4K, high refresh and fulsome adaptive-synced frame rates right now, so I can hardly tell you what to go for. But if a single issue was likely to swing it, then fears of frequent input lag might be the 4K deal breaker for me. I really hate input lag.