2016 Will Be Great For Gamers: Part 1, Graphics

Twice the performance for the same cost - in theory

Four long, desolate years. Yup, it really was 1,460 sleeps ago, almost to the day, that the very first 28 nanometer graphics chip was launched, allowing card-makers to squeeze billions more transistors into their GPUs - meaning better performance for theoretically lower costs as a result. But here we are and 28nm is still as good as it gets for PC graphics. That's a bummer, because it has meant AMD and Nvidia have struggled to improve graphics performance without adding a load of cost. It's just one reason why 2015 has kind of sucked for PC gaming hardware. But do not despair. 2016 is going to be different.

In fact, it's not just graphics that's getting a long overdue proverbial to the nether regions. Next year is almost definitely going to be the best year for PC gaming hardware, full stop, for a very long time. So strap in for what is merely part one of my guide to the awesomeness that will be 2016.

NB: For instant gratification you can find the usual TL;DR shizzle at the bottom.

The 28nm problem

The problem with PC graphics has a name and its name is TSMC. That's the Taiwanese outfit which, as we speak, knocks out all the high performance graphics chips you can buy. AMD and Nvidia design 'em. TSMC bangs 'em out. We play games.

In theory, TSMC should wheel out a new production process every couple of years at worst. In simple terms, that means the ability to make chips composed of ever smaller components, mainly transistors. Which is good for a number of reasons. With smaller transistors, you can pack more of them into a given chip size. More transistors in a given chip size means more performance for the same money. Or you can make smaller chips that maintain performance but cost less. It's all good and the basis upon which our PCs keep getting faster and cheaper.
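For a rough feel of why a node shrink matters, here's a back-of-envelope sketch in Python. It assumes perfectly idealised scaling, where the number in the node name is a real linear feature size and transistor area shrinks with its square. That's an assumption for illustration only: node names are as much labels as measurements, and real processes tend to land well short of the ideal. The function name is made up for this example.

```python
def ideal_density_gain(old_nm: float, new_nm: float) -> float:
    """Idealised transistor-density multiplier for a linear shrink.

    Assumes area per transistor scales with the square of the node
    number, which real manufacturing processes rarely manage.
    """
    if old_nm <= 0 or new_nm <= 0:
        raise ValueError("node sizes must be positive")
    return (old_nm / new_nm) ** 2


if __name__ == "__main__":
    # Idealised upper bounds only, not predictions for real chips.
    for old, new in [(28, 16), (28, 14)]:
        gain = ideal_density_gain(old, new)
        print(f"{old}nm -> {new}nm: up to {gain:.2f}x transistors per mm^2")
```

Treat whatever comes out as a ceiling, not a forecast. The same sum also works backwards: hold the transistor count steady and the chip gets smaller by the same factor, which is where the "same performance, costs less" option comes from.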

Smaller transistors also tend to allow for better power efficiency and / or higher clockspeeds, the benefits of which I shall trust you to tabulate on your own.

Anyway, TSMC hasn't exactly been nailing its roadmap of late. Prior to the current logjam, it got a bit stuck on 40nm. So, it binned the planned jump to 32nm for graphics chips, said sod everything, and went straight to 28nm. Gillette-stylee but probably without the corporate triumphalism, you might say.

I digress. The same thing has happened again. Only this time it's been even worse, taken even longer and the jump will be from the existing 28nm node to 16nm.

So, there you have it. Next year's graphics chips will finally get a new process node and we can expect a really dramatic leap in performance across the whole market, right? Affirmative. But as ever it's a bit more complicated than that.

No more new but not really new boards like AMD's 390X in 2016

FinFET fun

For starters, Nvidia will indeed have its next family of graphics chips made by TSMC at 16nm. But not AMD. For the first time, it's going to hand its high performance graphics chips over to the company that makes its CPUs and APUs, namely GlobalFoundries, or GloFo for short.

GloFo, of course, used to be part of AMD before it was spun off during one of the latter's regular cash haemorrhaging fits, sorry, carefully managed rounds of restructuring. And GloFo's competing process will be 14nm, not 16nm. When you're talking about processes at different manufacturers they're not always directly comparable. So let's just say they're in the same ballpark.

The other interesting bit is that both processes will sport what's known as FinFET tech. This is somewhat complicated and involves the normally flat channels inside transistors extending upwards (hence 'fin') into the gate and allowing for much better control of channel inversion and in turn conductivity from source to drain.

I know, tremendous. But seriously, think of FinFETs as 3D transistors that are more efficient and as offsetting some of the problems that pop up as these things shrink ever smaller. The critical point is that the FinFET thing means that this isn't just a straight die shrink from 28nm to 16nm or 14nm. It's supposedly going to be even better than that.

This somewhat silly water-cooled AMD Fury contraption is what happens when you get stuck on 28nm

Of course, they always say that. Wasn't it strained silicon that made the last node or three super special? Or was that SOI? No, sorry, it was high-k metal gate. Wasn't it? Whatever.

The big question mark in all this is arguably GloFo. It hasn't made big graphics chips for AMD before. But that's a story for another day. Let's just cross our fingers for now because if GloFo cocks it up, we're all buggered.

Those next-gen cards in full

What we do know is that the manufacturing side should allow for much, much more complex GPUs. In fact, I'll tell you how much more. Roughly twice as much more. Yup, today's top-end GPUs are around the 8-9 billion transistor mark and these next-gen puppies due out in 2016 are going to take that to at least 15 billion and beyond at the high end. I cannot overemphasise just how staggeringly, bewilderingly big these numbers are. This stuff really is a marvel of modern science and engineering.

AMD's new family of chips will be known as Arctic Islands and the big 15+ billion transistor bugger is codenamed Greenland. Nvidia's lot is Pascal and the daddy is GP100, which is rumoured at around 17 billion transistors. Monsters, both, in other words.

4K gaming on a single somewhat affordable GPU in 2016? The affordable bit might be a stretch...

High bandwidth memory comes good

As if that wasn't enough, both are going to be using the second gen of that HBM or High Bandwidth Memory gunk that AMD used on its Fury boards this year. HBM delivers (unsurprisingly, given its name) loads of bandwidth. But the first generation only allowed for 4GB of graphics memory. That sucked but HBM2 takes that to 32GB, which I think you'll agree sounds like plenty. Actually, the high end may well top out at 16GB, but the point is that HBM2 doesn't have a size problem.

Either way, we're looking at roughly 1TB/s of graphics memory throughput, which technically is known as '1CT' or 'a craptonne of bandwidth'. Of course, there will be architectural improvements, too. Nvidia is arguably ahead of the curve here with its uber-efficient Maxwell chips. But AMD will be making its first really major revision to the GCN tech that's been powering its graphics chips for the last four years. GCN 2.0? Most likely.
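If you fancy sanity-checking that 1TB/s figure and the 4GB versus 32GB capacities, the sums are simple enough to sketch. The configuration below is an assumption, not something confirmed for any 2016 card: four memory stacks, a 1024-bit interface per stack, around 1Gbit/s per pin for first-gen HBM and 2Gbit/s for HBM2, with 1GB and 8GB per stack respectively. The class and field names are invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HbmConfig:
    """Hypothetical HBM setup; every figure here is an assumed input."""
    stacks: int            # memory stacks on the package
    bus_width_bits: int    # interface width per stack
    pin_rate_gbps: float   # data rate per pin, in Gbit/s
    gb_per_stack: int      # capacity per stack, in GB

    @property
    def bandwidth_gb_s(self) -> float:
        # bits per second across all pins, divided by 8 to get bytes
        return self.stacks * self.bus_width_bits * self.pin_rate_gbps / 8

    @property
    def capacity_gb(self) -> int:
        return self.stacks * self.gb_per_stack


# Assumed configurations, chosen to mirror the figures quoted in the text.
hbm1 = HbmConfig(stacks=4, bus_width_bits=1024, pin_rate_gbps=1.0, gb_per_stack=1)
hbm2 = HbmConfig(stacks=4, bus_width_bits=1024, pin_rate_gbps=2.0, gb_per_stack=8)

if __name__ == "__main__":
    for name, cfg in [("HBM1", hbm1), ("HBM2", hbm2)]:
        print(f"{name}: {cfg.bandwidth_gb_s:.0f} GB/s, {cfg.capacity_gb} GB")
```

Under those assumptions the second-gen setup works out at 1,024GB/s and 32GB, which squares with the numbers above. Halve the capacity per stack and you get the 16GB that the high end may actually ship with.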

All told, the net result could well be something silly like 80 per cent more performance. Graphics cards that are suddenly not far off twice as fast as before, in other words.

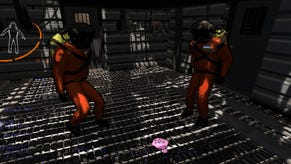

If VR is to be the next big thing, we're going to need much more powerful GPUs...

This isn't just a high end story, either. The impact of this will extend across the market. Maybe not from day one. But as the whole range of 14/16nm GPUs appears with chips like Nvidia's GP104 and AMD's Baffin, we could be looking at not far off double the performance at any given price point by year's end. So budget cards could mean big performance.
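To put a number on the price side of that, here's a quick sketch. It assumes, purely for illustration, that price scales in a straight line with performance, which real graphics card pricing rarely does. The function name and default price are made up.

```python
def cost_for_todays_performance(uplift: float, price_today: float = 1.0) -> float:
    """Price of today's performance after a performance-per-pound uplift.

    `uplift` is fractional: 0.8 means 80 per cent more performance at a
    given price. Assumes price is strictly proportional to performance.
    """
    if uplift <= -1.0:
        raise ValueError("uplift must be greater than -1")
    return price_today / (1.0 + uplift)


if __name__ == "__main__":
    for uplift in (0.8, 1.0):
        share = cost_for_todays_performance(uplift)
        print(f"{uplift:.0%} uplift: today's performance for {share:.1%} of today's price")
```

So a full doubling means today's performance for half the money, while an 80 per cent uplift leaves you paying a bit over half.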

This kind of generational performance leap has happened before. But the long wait is going to make it especially satisfying this time around. As for exactly when this will all kick off, it's not certain. But we should see the first launches by next summer. With all that in mind, 2016 in PC graphics will look like this:

TL;DR

- PC graphics finally moves away from ancient 28nm transistors in 2016

- HBM2 memory will give an insane 1TB/s of bandwidth and support 32GB of graphics memory

- Overall upshot could be a near doubling of gaming graphics performance...

- ...or today's performance at roughly half the cost

- Proper 4K gaming on a single GPU should be possible

- GPUs with enough performance for really high resolution virtual reality (VR) look likely, too

- Nvidia is sticking with TSMC for manufacturing, but question marks remain over AMD's new graphics chip partner, GloFo

Overall, then, things look very fine for PC graphics next year. And yet that's just the beginning. 2016 is looking hot in a lot of other areas too. Like CPUs. And SSDs. And displays. And stuff I can't be arsed to think of right now but hopefully will cross my mind in time. So tune in next time for a whole lot more awesome.