What is HDR and how can I get it on PC?

Our HDR guide has it all

If you've thought about buying a new TV in the last couple of years, you'll no doubt have been bombarded with the glorious three-letter acronym that is HDR. Standing for 'High Dynamic Range', HDR is a type of display technology that's all about making the things you see onscreen more realistic and closer to how they'd look in everyday life. That means improved contrast, a wider range of more accurate colours and increased luminosity - and it's finally arrived on PC.

HDR on PC is a bit more complicated than it is over in the world of TVs, however, and a variety of competing standards, compatibility issues and the general fussiness of Windows 10 can make it quite a messy thing to try and untangle. In this article, I'll be taking you through what HDR actually is and does, why it makes your games look better, as well as the graphics cards, monitors, and PC games you need in order to take advantage of it.

What is HDR?

Forget 4K. HDR is, for my money, the most exciting thing to happen in the world of displays since we stopped having to twiddle tiny little screws round the end of our monitor cables to get them to stay in place. As mentioned above, HDR is all about producing a more natural, life-like image through the use of brighter whites, darker blacks and a boatload more colours in-between than what we're currently used to seeing on an SDR (or standard dynamic range) display.

The most important thing about HDR is that increased brightness. Without it, you lose that sense of contrast or 'dynamic range' that's so vital to the way our eyes generally perceive the world around us. If you've ever taken a photo on a cloudy day and the sky's come out all white, even though you yourself can clearly see several different shades of grey up there, it's because your camera's sensor can't capture that range of light in the same way your own eyeballs can.

The same thing happens on SDR TVs and monitors. HDR aims to rectify this, showing you images onscreen that are as close as possible to how you'd see them in real life. That means better skies and horizons that are full of vivid and vibrant colour detail instead of huge swathes of white, and a more accurate gradation from dark to light when you move into murky pools of shadow.

Below, you'll discover how HDR actually achieves all this, but if you want to skip straight to the good stuff about HDR-compatible graphics cards and PC games, then click this lovely link here: What graphics card do I need for HDR and what PC games support it?

How does HDR affect brightness?

The overall brightness of a display largely depends on the complexity of its backlight. In an LCD, the backlight is the bit of the monitor that illuminates the pixels in front of it in order to show colour, and is usually made up of LEDs, which is why you often see TVs referred to as LCD LED TVs. These days, most monitors are 'edge-lit' by backlights that run down either side of the display.

The very best TVs, however, have largely ditched the edge-lit method for lots of little backlight zones arranged in a grid. TV bods often refer to this as 'full array local dimming', as it enables a TV to more accurately respond to what's onscreen, lowering the brightness in darker parts of the image in order for brighter ones to really come to the fore, such as street lamps and stars in the night sky. The more backlight zones a display has, the more accurately it can depict what's happening onscreen while maintaining that all-important sense of contrast and dynamic range.
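To make the idea a bit more concrete, here's a minimal Python sketch of local dimming. It's an illustration under some big simplifying assumptions, not how any particular TV or monitor actually drives its backlight: the frame is a tiny grid of brightness values between 0 and 1, the zones are equal-sized rectangles, and each zone is simply set to the brightest pixel it has to show. The function name `zone_backlight_levels` is made up for this example.

```python
def zone_backlight_levels(frame, zone_rows, zone_cols):
    """Return one backlight level per zone for a frame of 0.0-1.0 brightness values.

    Assumption for illustration: each zone is driven at the level of its
    brightest pixel, so dark zones dim right down while bright ones stay lit.
    """
    height, width = len(frame), len(frame[0])
    if height % zone_rows or width % zone_cols:
        raise ValueError("frame must divide evenly into zones")
    zone_h, zone_w = height // zone_rows, width // zone_cols

    levels = []
    for zr in range(zone_rows):
        row_levels = []
        for zc in range(zone_cols):
            # Collect every pixel that sits in front of this backlight zone.
            pixels = [
                frame[y][x]
                for y in range(zr * zone_h, (zr + 1) * zone_h)
                for x in range(zc * zone_w, (zc + 1) * zone_w)
            ]
            row_levels.append(max(pixels))
        levels.append(row_levels)
    return levels


# A 4x4 "night sky": all black apart from one bright star in the top-right corner.
night_sky = [
    [0.0, 0.0, 0.0, 1.0],
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]

single_zone = zone_backlight_levels(night_sky, 1, 1)    # one zone for the whole screen
local_dimming = zone_backlight_levels(night_sky, 2, 2)  # four zones
print(single_zone)
print(local_dimming)
```

With a single zone, the entire backlight has to run at full tilt for the sake of one star. With four zones, only the top-right one lights up and the rest of the sky stays dark, and the more zones you add, the smaller that lit-up patch becomes.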

The good news is that we're finally starting to see PC monitors do this as well. The Asus ROG Swift PG27UQ and Acer Predator X27 both have 384 backlight zones to their name, and the ludicrously wide Samsung CHG90 has them too - although exactly how many is currently only known to Samsung's engineers.

Having lots of backlight zones is all well and good, but the key thing you need to watch out for is how bright they can actually go. Again, taking TVs as a starting point, the best (currently identified via the Ultra HD Premium logo) can hit a whopping 1000cd/m2 (or nits, as some people say). Your average SDR TV, meanwhile, probably has a max brightness somewhere in the region of 400-500cd/m2, and SDR monitors don't usually go much higher than 200-300cd/m2.

This is where things get a bit complicated, as I've seen several monitors claim they're HDR without getting anywhere near this kind of brightness intensity.

The current front-runners - the Asus PG27UQ and Acer Predator X27 - are targeting 1000cd/m2 like their Ultra HD Premium TV rivals. This is what Nvidia are also going after with their G-Sync HDR standard (of which the PG27UQ and X27 are a part), and it's one of VESA's DisplayHDR standards as well, helpfully dubbed VESA DisplayHDR 1000.

However, VESA also have HDR standards further down the spectrum for 600cd/m2 and 400cd/m2 brightness levels - VESA DisplayHDR 600 and VESA DisplayHDR 400. These will likely become the most widely-used standards in PC land, and I've already seen several monitors from the likes of AOC and Philips starting to adopt them.

Having a lower max brightness level, however, will naturally lessen the overall impact of HDR, and given that most SDR PC monitors can already hit around 300cd/m2, I'd argue that an extra 100cd/m2 isn't going to make a huge amount of difference.

As such, if you're considering getting an HDR monitor, I'd strongly advise going for one that, ideally, has a peak brightness of 1000cd/m2, or 600cd/m2 at the very minimum. Otherwise, you're not really getting that much of an upgrade. These will naturally be more expensive than 400cd/m2 monitors, but if you really want to experience the full benefit of HDR, then you'll be doing yourself a disservice with anything less.

How does HDR affect colour?

Of course, HDR isn't just about increased brightness. It's also about displaying a wider range of colours for that enhanced sense of realism. In many ways, brightness and colour quality are very much tied together, as due to a weird quirk in how our eyes work, the brighter something is, the more colourful we perceive it to be.

Taking brightness out of the equation for a moment, though, there are two main ingredients HDR needs to achieve its enhanced colour. The first relates to the panel's bit-depth, and the second is to do with the range of colours it's able to display - which is where all that talk about colour gamuts comes in. (I'm going to warn you now - there will be some GRAPHS in a minute to illustrate this, so be prepared for lots of bright colours).

For example, for a TV to be classified as an Ultra HD Premium TV, it must have a 10-bit colour panel and be able to hit at least 90% of the DCI-P3 colour gamut.

Let's tackle bit-depth first. Without getting too bogged down in technical detail, the most important thing you need to know is that most displays these days use 8-bit panels that can display 16.78 million colours (you may also see this referred to as 24-bit colour or True Colour). Not bad, you might think, until you realise that 10-bit panels (also sometimes called 30-bit colour or Deep Colour) can display a massive 1.07 billion of them.
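If you're wondering where those numbers come from, the arithmetic is simple enough to check in a few lines of Python. Each pixel mixes red, green and blue, and the bit-depth tells you how many shades of each it has to play with. The function name `colour_count` is just one I've picked for this example.

```python
def colour_count(bits_per_channel):
    """Number of distinct colours with red, green and blue channels of this depth."""
    levels = 2 ** bits_per_channel  # shades available per channel
    return levels ** 3              # every red/green/blue combination


eight_bit = colour_count(8)   # 16,777,216, or about 16.78 million
ten_bit = colour_count(10)    # 1,073,741,824, or about 1.07 billion
print(f"8-bit:  {eight_bit:,}")
print(f"10-bit: {ten_bit:,}")
print(f"10-bit has {ten_bit // eight_bit}x as many colours")
```

This is also why 8-bit gets called 24-bit colour (three channels of eight bits each) and 10-bit gets called 30-bit colour.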

This is largely where PC monitors have been lagging behind. Even Nvidia have admitted that their G-Sync HDR panels on the Asus ROG Swift PG27UQ and Acer Predator X27 are technically only 8-bit panels that simulate 10-bit quality by means of a process called '2-bit dithering' rather than being proper 10-bit, and you'll find a similar situation over at VESA's DisplayHDR standard as well. Here, VESA says a display only needs to have a true 8-bit panel (the 6-bit + 2-bit dithering that cheaper displays sometimes use isn't good enough here) to earn itself one of their HDR monitor badges. If a display is 8-bit + 2-bit, then it's doing even better.
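Neither Nvidia nor VESA spell out exactly how the dithering works on these panels, so treat the following as a rough sketch of one common approach rather than a description of any specific monitor. The assumption here is temporal dithering: the panel flips between two neighbouring 8-bit levels over a short run of frames so that the average lands on the in-between 10-bit shade. The function name `dither_to_8_bit` and the four-frame cycle are choices made for this illustration.

```python
def dither_to_8_bit(value_10_bit):
    """Approximate one 10-bit level (0-1023) with a cycle of four 8-bit frames.

    Assumed scheme, for illustration only: show the next 8-bit level up for
    some of the frames and the level below for the rest, so the average over
    the cycle lands on (or very near) the 10-bit target.
    """
    if not 0 <= value_10_bit <= 1023:
        raise ValueError("expected a 10-bit value between 0 and 1023")
    base, remainder = divmod(value_10_bit, 4)  # the 2 extra bits are the remainder
    upper = min(base + 1, 255)                 # can't go brighter than 8-bit white
    return [upper if i < remainder else base for i in range(4)]


target = 514                       # a 10-bit shade that falls between 8-bit levels
frames = dither_to_8_bit(target)   # two frames at 129, two at 128
average = sum(frames) / len(frames)
print(frames, "average:", average, "target in 8-bit terms:", target / 4)
```

A real panel would also shuffle which pixels get the higher level on which frame so you don't notice any flicker, but the principle is the same: your eyes do the averaging.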

Personally, I'm not too worried about the 8-bit + 2-bit situation. Having seen the Asus PG27UQ up close, it's still pretty damn convincing and easily the equal of any Ultra HD Premium TV out there. Still, even if PC monitors aren't quite at true 10-bit yet, an 8-bit + 2-bit panel will still likely be better than one that's just simply 8-bit, making it another thing to watch out for when you eventually come to buying one.

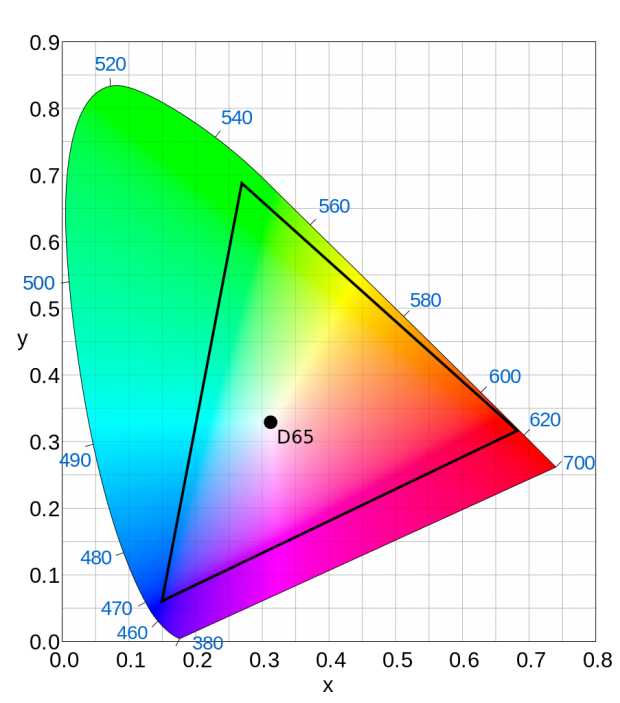

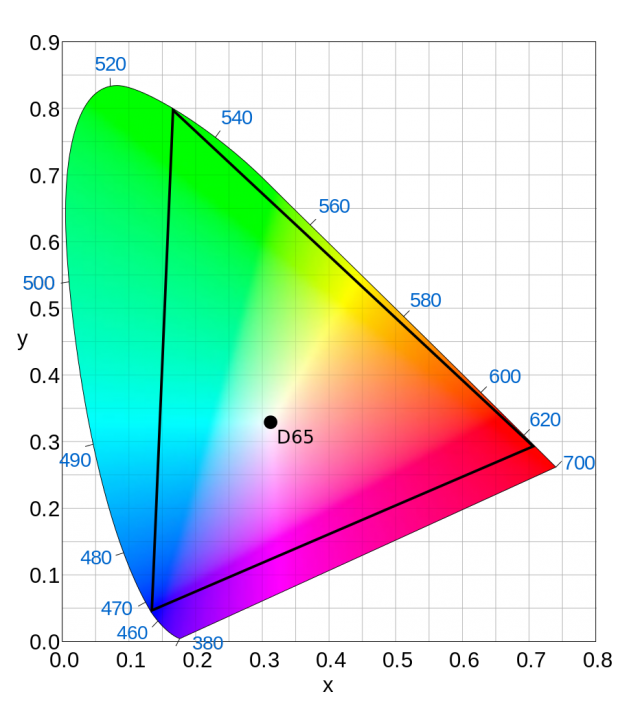

Indeed, the number of colours a monitor is able to produce will naturally have a knock-on effect on its overall gamut coverage. Most SDR displays are configured to show colours in what's known as the sRGB colour gamut. This is a small portion (around 33%) of all the known colours our eyes can physically perceive, and largely resembles the number of colours visible in nature - what's known as Pointer's gamut, which in itself represents just under half of all known colours (or chromaticities) in the overall colour spectrum.

Until now, sRGB has been absolutely fine for everyday computing. It was, after all, created by Microsoft and HP in the mid-90s for that very purpose. But HDR displays are now targeting a much wider colour gamut known as DCI-P3. Covering around 45% of all known colours and a much larger chunk of Pointer's gamut (87% as opposed to sRGB's 69%), this standard was created by several of today's top film studios for digital cinema (DCI actually stands for Digital Cinema Initiatives).

The main advantage DCI-P3 brings over sRGB is its extended red and green coverage, which is important because these are the colours our eyes are most sensitive to under normal lighting conditions. Its farthest blue point is actually exactly the same as what you'll find in sRGB, as this is the colour we're least sensitive to.
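For a rough feel of the difference, you can compare the two gamuts as triangles on the CIE 1931 xy chromaticity diagram, using the red, green and blue corner points each standard defines. This is a back-of-the-envelope sketch: triangle area on that diagram is a crude measure and won't reproduce the percentage figures quoted above, which depend on which diagram and reference area you measure against. The function name `triangle_area` is my own.

```python
# CIE 1931 xy chromaticity coordinates of each gamut's red, green and blue primaries.
SRGB = {"red": (0.640, 0.330), "green": (0.300, 0.600), "blue": (0.150, 0.060)}
DCI_P3 = {"red": (0.680, 0.320), "green": (0.265, 0.690), "blue": (0.150, 0.060)}


def triangle_area(gamut):
    """Area of the triangle spanned by a gamut's three primaries (shoelace formula)."""
    (x1, y1), (x2, y2), (x3, y3) = gamut["red"], gamut["green"], gamut["blue"]
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2


srgb_area = triangle_area(SRGB)
p3_area = triangle_area(DCI_P3)
print(f"sRGB triangle area:   {srgb_area:.4f}")
print(f"DCI-P3 triangle area: {p3_area:.4f}")
print(f"DCI-P3 is about {p3_area / srgb_area:.2f}x the size of sRGB")
print("Same blue primary:", SRGB["blue"] == DCI_P3["blue"])
```

By this measure the DCI-P3 triangle comes out roughly a third bigger than sRGB's, with all of the extra area coming from the red and green corners being pushed outwards while the blue corner stays put.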

As mentioned above, the very best TVs must cover at least 90% of the DCI-P3 colour gamut, and this is largely being adopted by PC monitors as well - both by Nvidia's G-Sync HDR specification and VESA's DisplayHDR standards.

You may have also heard the phrase Rec. 2020 being bandied about. This refers to (among other things) an even wider colour gamut than DCI-P3 - around 63% of all known colours and 99.9% of Pointer's gamut - and is also included as part of the minimum specification for Ultra HD Premium TVs.

However, while many Ultra HD Premium TVs support the Rec. 2020 standard, that doesn't necessarily mean they can actually display it. Indeed, there is currently no known display - certainly not one that's available to buy, at any rate - that's capable of producing anything close to the full Rec. 2020 colour space, and doing so properly will likely require the arrival of 12-bit panels.

As such, it will be a number of years yet before we need to start worrying about Rec. 2020, and any support included in stuff today is really only concerned with trying to help future-proof things further down the line. Really, the only thing you need to focus on right now is how well a monitor can display the DCI-P3 gamut.

What about AMD FreeSync 2 HDR?

Just to confuse things further, AMD are also throwing their hat into the HDR ring with their own standard: FreeSync 2. Much like Nvidia's G-Sync HDR, this is an extension of AMD's already-existing adaptive sync technology, FreeSync, which ensures low latency and adapts your monitor's refresh rate in real time to match the frames being spat out by your graphics card for super smooth gaming and minimal tearing.

The reason I haven't really mentioned FreeSync 2 until now is because AMD themselves haven't ever said what FreeSync 2's specification actually entails. The only concrete thing they've said about FreeSync 2 is that it will offer "twice the perceivable brightness and colour volume of sRGB". Considering sRGB has nothing to do with brightness measurements, that essentially means naff all.

The only thing we really have to go on is the Samsung CHG90. When I tested this earlier in the year, I saw a peak brightness of around 500cd/m2, which sort of tallies with twice the perceivable brightness of a typical monitor, but only a DCI-P3 coverage of around 69% and sRGB coverage of 96% - which is a far cry from what Nvidia are going after with their 1000cd/m2, 90% DCI-P3 G-Sync HDR standard.

This would suggest that FreeSync 2 is more in line with VESA's DisplayHDR 400 standard, which demands 95% sRGB coverage (technically it says Rec. 709, but this is also what sRGB is actually based on) and a max brightness of at least 400cd/m2.

However, in a recent interview with PC Perspective, AMD said FreeSync 2 wasn't just getting rebranded to FreeSync 2 HDR, but that it's essentially going to be targeting the same specification as VESA DisplayHDR 600 - which is confusing to say the least, since the FreeSync 2-branded Samsung CHG90 clearly doesn't meet its 90% DCI-P3 and 600cd/m2 requirements.

After much to-ing and fro-ing over what AMD actually meant in that interview, it would appear that they're settling for something in the middle. In other words, they're not 'lowering the bar' by aligning FreeSync 2 specifically with the DisplayHDR 400 specification, but a display certified for both DisplayHDR 400 and FreeSync 2 would exceed what's required of DisplayHDR 400 alone. As a result, a DisplayHDR 600 display would also meet the FreeSync 2 specification and in turn likely exceed whatever the hell FreeSync 2 actually means.

In short, it would appear AMD FreeSync 2 probably requires a maximum brightness of at least 500cd/m2, but the jury's out when it comes to colour gamut coverage.

If you thought that was confusing, though, then hold onto your hats...

What is HDR10 and Dolby Vision?

Another of the most common HDR standards you'll see slapped on TV and display boxes is HDR10. This has been adopted by almost every HDR TV currently available (as well as the PS4 Pro and Xbox One X) and it's the default standard for Ultra HD Blu-rays as well - handy if you want to make sure your TV can actually take full advantage of that Ultra HD Blu-ray collection you're building.

In display terms, HDR10 includes support for 10-bit colour, the Rec. 2020 colour gamut and up to 10,000cd/m2 brightness. Again, HDR10 may support all these things, but that doesn't necessarily mean a display can actually do all of them in practice.

Case in point: Dolby Vision. This has support for 12-bit colour (which doesn't really exist yet) in addition to a max brightness of 10,000cd/m2 and Rec. 2020 colour gamut support. Like I've said before, I'm sure there will come a time when these standards will start to make a meaningful difference to what you see on your tellyboxes, but right now, you're getting pretty much the same deal either way.

Another key thing to point out is that what differentiates HDR10 and Dolby Vision (and now HDR10+, which is an improved version of HDR10) is how they handle HDR metadata - how films and their respective Blu-ray players talk to TVs in order to interpret their signals. This may be useful if you buy an HDR10 monitor, for instance, with the intention of hooking it up to an HDR10 compatible Blu-ray player, but otherwise, these other standards can largely be left behind in the world of TV buying.

Instead, it looks like the VESA DisplayHDR standards will become the baseline for PC HDR for now, with Nvidia's G-Sync HDR and AMD's FreeSync 2 HDR standards operating at the other end of the scale in much the same way as their regular G-Sync and FreeSync technologies do.

What do I need for HDR on PC?

With all this in mind, the best way to experience HDR on PC at the moment is to buy a monitor that hits the following sweet spots:

- A high maximum brightness, ideally 1000cd/m2, but at least 600cd/m2 if not

- At least 90% coverage of the DCI-P3 colour gamut

- A 10-bit panel (or an 8-bit + 2-bit one)
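If you like your shopping lists in executable form, here's the checklist above as a short Python sketch. The `MonitorSpec` class, the `hdr_shortfalls` function and both example monitors are invented for illustration, and the numbers plugged in are placeholders rather than measurements of any real product.

```python
from dataclasses import dataclass


@dataclass
class MonitorSpec:
    name: str
    peak_brightness: float  # cd/m2
    dci_p3_coverage: float  # percentage, 0-100
    panel_bits: int         # native bit-depth per channel
    dither_bits: int = 0    # extra bits simulated through dithering


def hdr_shortfalls(monitor):
    """List the ways a monitor misses the three sweet spots (empty list = all met)."""
    problems = []
    if monitor.peak_brightness < 600:
        problems.append("peak brightness below 600cd/m2")
    if monitor.dci_p3_coverage < 90:
        problems.append("DCI-P3 coverage below 90%")
    if monitor.panel_bits + monitor.dither_bits < 10:
        problems.append("less than 10-bit colour, even with dithering")
    return problems


# Two made-up monitors with placeholder specs.
flagship = MonitorSpec("Made-up flagship", 1000, 90, panel_bits=8, dither_bits=2)
budget = MonitorSpec("Made-up budget 'HDR' monitor", 400, 95, panel_bits=8)

for monitor in (flagship, budget):
    problems = hdr_shortfalls(monitor)
    print(monitor.name, "->", problems or "hits all three")
```

The 600cd/m2 threshold is the bare minimum from the list, so a monitor that passes this check may still fall short of the 1000cd/m2 ideal.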

The problem comes when cheaper monitors say they do HDR, when really all they're hitting is the increased colour gamut coverage. This is fine if all you're after is a more accurate and better-looking screen without spending a huge amount of money - the BenQ EW277HDR (just £180 / $200) is a great example of this. But for those yearning for a true HDR 'wow factor', it's all about that maximum brightness.

At the moment, the only monitors that really hit those requirements (and are actually available to buy, sort of) are the Asus ROG Swift PG27UQ and Acer Predator X27 - and sadly you're going to have to pay through the roof in order to get one, too, with the Asus PG27UQ currently demanding £2300 of your fine English pounds or $2000 of your fine American dollars. The Samsung CHG90 is another decent choice if you're after a super ultrawide monitor as well, but its maximum brightness does let it down slightly. It also still costs an arm and a leg at £1075 / $1000.

You will also need a PC running Windows 10 and a graphics card that can output HDR video signals. Speaking of which, why not head here next?

What graphics card do I need for HDR and what PC games support it?