How Spirit hopes to bring humanity to AI

Better chats with NPCs and humans

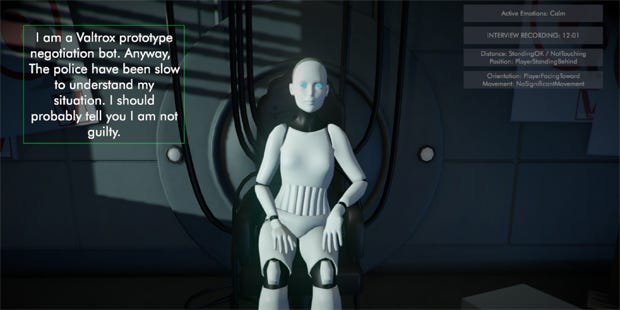

Sitting in a Bath tearoom, having just wiped the lemon curd from my fingers, I was tasked with interrogating a robot about a murder. The interrogation scene was the GDC demo for Spirit AI – middleware geared around bringing more expressive characters to gaming as well as building safer and more inclusive online environments. Both hinge on the same set of technologies. They each look at language to understand interactions, but one uses that understanding to build meaningful encounters with AI characters and the other uses it to keep an eye on how players are behaving towards one another.

I was sitting with Mitu Khandaker, creative director at Spirit AI. You might remember her work, as developer The Tiniest Shark, on the game Redshirt or, if you're in academia, know her as an assistant arts professor at NYU Game Center who holds a PhD in games and the aesthetics of interactivity. She was watching me play through the demo, using natural language to try to figure out how a man called Martin died and whether the robot is culpable. The demo was by Bossa Studios, makers of Surgeon Simulator, and it gave me a limited amount of time to chat with the robot – me typing and the robot speaking into the tearoom with a female voice in a Scottish accent – before asking for my verdict on her guilt.

So far I had made the robot shy by asking if she was lying about not being able to kill humans and she reiterated the prime directive. As I probed for information the AI character was walking a difficult tightrope, trying to conceal some things in order to stay true to her character but volunteer others so that, as a player, I didn't get frustrated or end up without any threads to tug.

As an example, I asked who did kill Martin and she didn't have that information and so couldn't answer. Instead of just saying "I don't know" she told me that she thinks the murder was related to his work and was almost certainly a human action. This gives me a direction in which to move.

The system isn't perfect at this point but it is intriguing and nuanced. After the robot mentioned a woman named Alicia, I asked what Alicia does, meaning what she does for a living, and the robot answered with what Alicia was last doing in terms of her actions in this scenario. It also seemed flummoxed by some of my phrasing, such as "why do X get cross with Y?" There was also a moment when I was trying to pin down motive and the robot seemed to think I was flirting.

But there is also a real sense of information unfurling in a way I'm not used to with games. The robot was generally able to keep track of who I meant when I started using deictic words like "she" or "they" or "there", which is impressive to me. You can also see, because of a few formal errors like missing capital letters at the start of sentences, that the AI is not following a script but is drawing conversational elements together to create our interaction based on what seems relevant and whether I've triggered particular beats.

At the end of the first playthrough of the demo I'll admit I was none the wiser as to the murder, but I had another round and found out a lot of helpful circumstantial things. Sergei was strong enough to have hit Martin as hard as was needed to kill him and Alicia wasn't, and Sergei couldn't access the room in which Martin was found. Alicia DID have access to that room AND the robot was covered in blood. Oh, and carrying a body wouldn't interfere with the prime directive.

I decided that Alicia and Sergei probably conspired to kill Martin with Sergei doing the actual killing and Alicia providing access to a location Sergei couldn't have reached. The pair then had the robot carry the body from where the murder happened to the location in which it was found.

I feel like I let Angela Lansbury and Hercule Poirot and Columbo down, though. They would have solved the whole thing and not just relied on circumstantial evidence for their conclusions. I also managed to phrase my answers to the robot at the end of the demo sufficiently unclearly that she thought I was accusing her of murder anyway. I was accusing her of being an accessory after the fact who had had her memory wiped! Justice is difficult.

We only have a short amount of time to take a look at what's happening under the hood for this specific scenario, but from what I see and from what Mitu points out it's setting out a framework within which the AI can improvise, acting and reacting dynamically instead of following one of those conversation trees where every branch must be scripted.

Through the system a writer or narrative designer would be able to highlight important pieces of information for the character to convey, what to keep nudging the interaction back towards, story beats to hit and so on rather than following a specific path. That's where the personality of an AI would also begin to be created – via how the character responds and prioritises, their motivations and their agenda.

"We're not trying to recreate humans or anything," says Mitu. "In fact, in a nutshell, with the character engine what we're doing is letting you, if you're a narrative designer or a writer, be creative and craft very specific stories but allow characters to behave dynamically within those stories."

There are obviously other aspects to a character – their body language, their gait, their specific vocabulary, their understanding of personal space and so on but those would be the domain of the developers as normal. In terms of which of those elements impact the interactions, that would also vary from game to game. Within the system you can also do things like define how assertive a character is when they speak. "We can plug in any amount of game state information into our system," says Mitu. "We already do proximity and we're about to do gestures in VR."

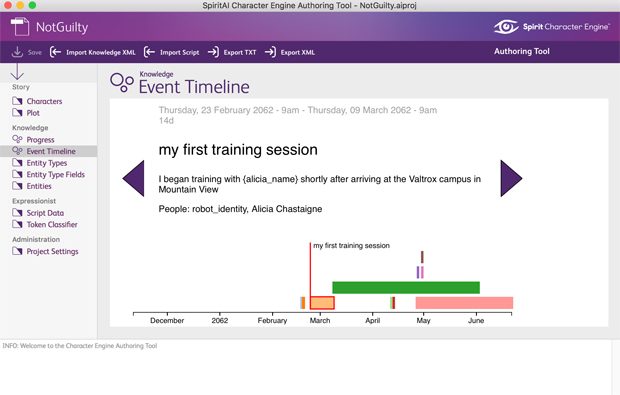

Here you can start to see how the game works with a timeline of events to establish one set of knowledge for the AI.

In fact, at one point I leaned in towards the robot during my interrogation scene via a keyboard command and she read that as an aggressive action. Mitu tells me that in the room-scale VR version you can stand behind the robot and loom over her which she perceives as threatening.

I start to worry about the impact this newfound awareness could have on my habit of squat-thrusting my way through exposition dialogue or trying to clamber on furniture when some authority figure is telling me about a mission. Imagine a Mass Effect where instead of staring into the middle distance and banging on about some family thing the NPC suddenly stops and says "Excuse me, that's my bed! Stop jumping on it with your grubby boots when I'm telling you a harrowing tale."

Mitu is interested in that too: "One of the things I'm really excited about when this stuff gets out in the wild is players doing the things they've always done and playing round with boundaries but having characters suddenly understand!"

Understanding is at the root of everything Spirit AI's developers are working on. As Mitu said, the goal isn't to create believable humans via AI, it's about players being able to express themselves in a naturalistic way and having the game systems understand them, thus helping create more meaningful interactions. As to the specific nature of those interactions, Mitu says everything is customisable:

"Characters have a script space and a knowledge model which is a repository of information they have about the world and the things they know. We don't assume anything. There are a bunch of defaults we give just so you know how to use the tool but you can customise everything."

There is still a lot of writing involved but the toolset is supposed to make that a bit more manageable and it can also generate similar lines of dialogue to make the process easier. You also need to set up the knowledge models for the different entities in the world.

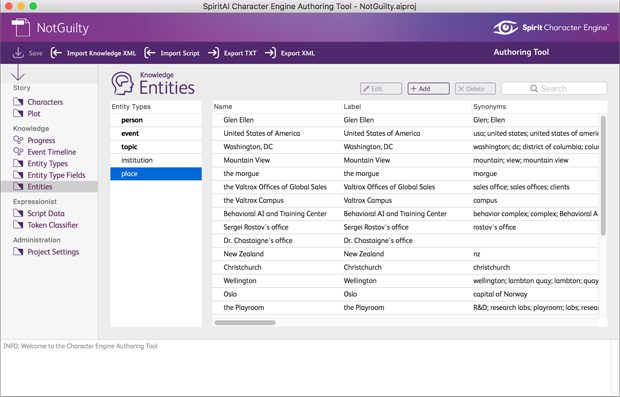

The synonyms column here shows you how the entities start to get rounded out so that the AI can parse meaning in a more natural and flexible way.

For example, you can have a company as an entity and the company can have employees which is another type of entity. A person would be a third entity but they can also be an employee – all things you can define and relate. Thus you can create the idea of a company with employees or you could create the idea of a nest of ravenous ants on an alien world made from ice cream. (No, YOU dropped an ice cream on the pavement earlier and are now preoccupied with the ants scaling its heights while you write a feature.)
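To make that a little more concrete, here is a rough sketch of how entities, their types, synonyms and relationships might be modelled. This is not Spirit AI's actual tooling, which I only glimpsed; the class names, the `employs` relation and the example company and person are all invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Entity:
    """One thing a character can know about (hypothetical structure)."""
    name: str
    kinds: set[str]  # an entity can be several things, e.g. person AND employee
    synonyms: set[str] = field(default_factory=set)
    relations: dict[str, list[str]] = field(default_factory=dict)


class KnowledgeModel:
    """A tiny stand-in for a character's repository of world knowledge."""

    def __init__(self) -> None:
        self._entities: dict[str, Entity] = {}

    def add(self, entity: Entity) -> None:
        self._entities[entity.name] = entity

    def relate(self, subject: str, relation: str, target: str) -> None:
        # Record that `subject` stands in `relation` to `target`.
        self._entities[subject].relations.setdefault(relation, []).append(target)

    def resolve(self, phrase: str) -> Optional[Entity]:
        # Match a player's phrase against names and synonyms, ignoring case.
        wanted = phrase.strip().lower()
        for entity in self._entities.values():
            labels = {entity.name.lower()} | {s.lower() for s in entity.synonyms}
            if wanted in labels:
                return entity
        return None

    def related(self, subject: str, relation: str) -> list[str]:
        return list(self._entities[subject].relations.get(relation, []))


def build_example_model() -> KnowledgeModel:
    # Invented data: a company with one employee who is also a person.
    model = KnowledgeModel()
    model.add(Entity("Example Corp", {"company"}, {"the company", "the firm"}))
    model.add(Entity("Alex", {"person", "employee"}, {"the engineer"}))
    model.relate("Example Corp", "employs", "Alex")
    return model
```

With something like this, a player typing "the firm" and a player typing "Example Corp" would land on the same entity, which is the sort of flexibility the synonyms column is there to provide.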

But alongside the framework for creating AI as game characters the Spirit team are employing that tech to help developers grow non-toxic communities. During her first conversation with Steve Andre (CEO and founder of Spirit AI and formerly of IBM where he worked alongside the team developing Watson, the computer system which won a dedicated edition of the US game show Jeopardy) Mitu asked: if the Spirit AI system could understand language, then why not use it to try and tackle harassment? Thus, as the other main facet of the work at Spirit AI, sits Ally.

Ally is a different tool and I'll quote from the official site to summarise what the company's goals are with it:

"Ally underpins the duty of care games studios and social platforms have towards their communities, helping to safeguard against abusive behaviour, grooming and other unwanted activity – all on the basis of listening to the player about what makes them feel safe."

To start dealing with abuse the system has to be able to recognise when it's happening. One traditional method is to scan for key words or phrases – slurs are usually at the top of the list, with other, more specific terms and behaviours following. One of the more memorable attempts at countering abuse recently was in Overwatch where "GG EZ" (an eye-rolly bit of bad manners indicating the opposition didn't offer much of a challenge) gets substituted in chat for lines of Blizzard's choosing like "I'm wrestling with some insecurity issues in my life but thank you all for playing with me."

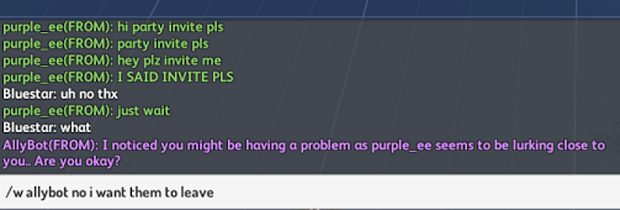

The game pictured here is an off-the-shelf MMO – the point of the experiment was to see whether the Ally chatbot could be plugged into it, not the game content itself.

Ally isn't looking to do that. For one thing trolls will note which words are banned and then work around them. Instead it's looking for patterns of behaviour. That's not to say it doesn't look at the language being used but it tries to see it in context. By that I mean that it's also looking at whether another person in the chat is expressing discomfort. That could be something like typing "leave me alone", but it can also see when interactions are largely one-way. So if a player is spamming someone else with group invites or unwanted attention and the recipient is trying to ignore it the system can register that. It can then combine that discomfort with instances of sexualised or racial language (or whatever else has been happening).
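As a very loose illustration of combining those signals, here is a sketch that looks at one pair of players and checks for one-way traffic, explicit discomfort and flagged language together. It is not how Ally is implemented; the phrase list, the thresholds and the `flagged_language` field (which assumes some upstream classifier has already marked a message) are all assumptions of mine.

```python
from dataclasses import dataclass

# Illustrative phrases only; a real system would need far more than this.
DISCOMFORT_PHRASES = ("leave me alone", "please stop", "go away")


@dataclass
class Message:
    sender: str
    recipient: str
    text: str
    flagged_language: bool = False  # assumed to be set by an upstream classifier


def assess(messages, initiator, target, one_way_ratio=0.8, min_messages=5):
    """Summarise the signals in messages between two players (hypothetical)."""
    sent = [m for m in messages if m.sender == initiator and m.recipient == target]
    replies = [m for m in messages if m.sender == target and m.recipient == initiator]

    # One-way: plenty of messages from the initiator and few or no replies.
    one_way = (
        len(sent) >= min_messages
        and len(sent) / (len(sent) + len(replies)) >= one_way_ratio
    )
    # Explicit discomfort: the target says something like "leave me alone".
    explicit_discomfort = any(
        phrase in m.text.lower() for m in replies for phrase in DISCOMFORT_PHRASES
    )
    flagged = any(m.flagged_language for m in sent)

    return {
        "one_way": one_way,
        "explicit_discomfort": explicit_discomfort,
        "flagged_language": flagged,
        # Assumed policy: check in when discomfort and flagged language coincide.
        "check_in": (one_way or explicit_discomfort) and flagged,
    }
```

The point of the sketch is that no single banned word triggers anything on its own: it is the combination of what is said and how the other person is (or isn't) responding that matters.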

Something developers can do with this information is use it to activate an NPC interaction. So perhaps a character from the game or just a community moderation bot would then send a message – "I noticed X was happening, are you okay?" From here the player could indicate whether they wanted to block the other player but also whether the system had misread the situation. Perhaps in this context the language and the lack of response were friendly teasing so you could specify that in this instance or with this particular player it was fine. Or even that it just wasn't bothering you. The system can then learn from those responses and apply your preferences in the future.

The developer could also set up Ally so that it talks to the player instigating the harassment to figure out what's going on on that side of the interaction.

The big thing here is the understanding that boundaries aren't fixed and that what is okay in one scenario might not be in another. Intervention is the preferred method here (according to a study Mitu references from Data and Society) in terms of its effectiveness at dealing with online harassment but that usually relies on having a robust moderation and community management team to make those interventions happen.

Spirit are working with some live games at the moment to feed data to the system and make improvements based on what developers and players actually need. Mitu tells me one of those games deals with 1,400 support tickets a day. That requires a mixture of processing power and emotional labour depending on the scenario. What Ally would help to do is take on some of that labour – more than is taken on by a mute button or kicking players into the low priority queue for abandoning games.

The system uses predictive analysis so it would also be able to flag up potential problems even if it doesn't understand them. For example if it picks up a pattern of players showing discomfort it can flag what's causing it so a human moderator can tag in and figure out what's going on. Thus it would theoretically be able to pick up on the workarounds trolls might be using or see when offensive memes flared up even if it didn't know what the content actually meant.
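The flagging idea can be sketched with something far cruder than predictive analysis: a simple frequency count of unfamiliar terms that keep turning up just before players show discomfort. This is a stand-in of my own to show the shape of the idea, not Spirit AI's method, and the function name, inputs and threshold are hypothetical.

```python
from collections import Counter


def terms_to_review(incidents, known_terms, min_reports=3):
    """Return unfamiliar terms that recur across discomfort incidents.

    incidents: message texts that preceded a discomfort signal (assumed input).
    known_terms: lowercase words the system already understands.
    """
    counts = Counter()
    for text in incidents:
        # Count each term once per incident so one spammy message can't dominate.
        for term in set(text.lower().split()):
            if term not in known_terms:
                counts[term] += 1
    frequent = [(term, n) for term, n in counts.items() if n >= min_reports]
    # Most frequent first, alphabetical on ties, so the output is stable.
    return [term for term, n in sorted(frequent, key=lambda pair: (-pair[1], pair[0]))]
```

The system doesn't need to know what a term means here; it only needs to notice that it keeps appearing alongside unhappy players and hand it to a human moderator.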

It wouldn't replace a ban hammer, nor is it intended to, but it would offer a broader and more nuanced toolset when dealing with problems in player communities.

On the flip side it could also be set up to pick out when players were being helpful, maybe teaching newbies how to play or keeping a team's morale up. Instead of punishments it could be set up to mete out rewards.

One thing I'd want to keep an eye on is the temptation to silo players off in toxic pools where they only get to play with one another. That might work for some games, or at least contain the problem there but I'd prefer the set-ups to focus on integrating groups of players and incentivising these positive reactions in the hope that it promoted positivity (or at least reduced harassment) elsewhere online. I'd also be interested in the potential to plug the Ally toolset into things like comment sections online.

Mitu touched on that a little in our interview, noting the capacity for Ally to help developers set the tone for their community.

In terms of the murder mystery proof of concept demo I was playing, there's enough there and the interactions worked well enough that I'm excited to see what happens next, especially when more elements get added in – animation, detailed art, different scenarios and games. But Ally is the tool I'm really hoping to see develop. I regularly interact with games deemed by many to be the most toxic in PC gaming (although they are also responsible for me meeting lifelong friends). The prospect of systems better set up to deal with the nuances of online harassment AND community building seems like something special.