Why 2016 Will Be A Great Year For PC Gaming Hardware

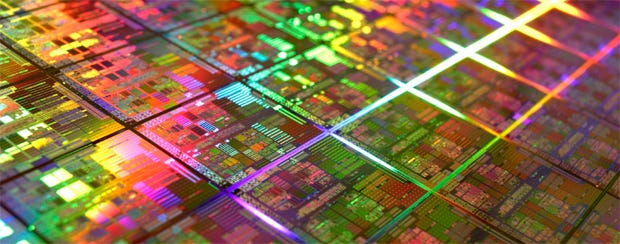

Build it and they will come...

2016 is going to be great for PC gaming hardware. Of that I am virtually certain. Last time around, I explained why the next 12 months in graphics chips will be cause for much rejoicing. That alone is big news when you consider graphics is arguably the single most important hardware item when it comes to progressing PC gaming. This week, I'll tell you why the festivities will also apply to almost every other part of the PC, including CPUs, solid-state drives, screens and more. Cross my heart, hope to die, stick a SATA cable in my eye, 2016 is looking up.

NB: Usual TL;DR drill is at the bottom.

Let's start with the biggie, the CPU or PC processor. It's debatable just how important CPU power is to PC gaming. Some say CPUs are already good enough. Hell, I've even flirted with that notion myself.

Basically, it's all a bit chicken and egg when it comes to CPUs and games. Unlike graphics, the things that bump up against CPU-performance bottlenecks tend to be a bit essential to the game. You can toggle much of the eye candy and retain playability, in other words. But dumb down the AI and the game itself may end up compromised.

Admittedly, quite a bit of CPU load is graphics related, which is why the prospect of DirectX 12 reducing rendering loads is so tantalising. But the overarching point here is that game developers are in the business of actually selling games and thus will only make games that run on existing CPUs. And if they want a big audience, that means games that run on existing mainstream, as opposed to high-end, CPUs.

Long story short, that big, ugly bar at the top gets the chop with DX12

Put another way, I'm relying on ye olde build-it-and-they-will-come theory, here. Who knows what wonders game devs would create if everyone's CPUs were suddenly twice as fast? Anyway, there's absolutely no doubt that mainstream / affordable CPU performance has stagnated in the last three or four years. It's barely budged.

We can argue the toss over the reasons for that. Has AMD failed to catch up to its age-old rival? Basically, yes. Has Intel intentionally sandbagged? Almost certainly.

AMD's new Zen CPUs

But here's the good news. By the end of 2016, AMD should have its new Zen-based CPUs on sale. And Zen is an all-new architecture, not a desperate rehash. Out goes the bold but borked modular architecture of the Bulldozer, AMD's main CPU tech since 2011. In comes a more traditional big-core approach that majors on per-core processor performance (you can read a deeper dive into Zen here).

Ultimately, we won't know if AMD has pulled it off with Zen until the chips are out. But AMD is making all the right noises about its design priorities for Zen. Possibly even more important, it was conceived under the stewardship of a guy named Jim Keller. This is the same guy who oversaw arguably AMD's one great design success, the Athlon 64, and later was hot enough property to end up at Apple doing its crucial iPhone chips. Clearly, this guy Keller knows his CPU onions.

Of course, if Zen does tear Intel a new one, we can expect the latter to react. In all likelihood, Intel's spies will discover how well Zen is shaping up well before launch and put the wheels in motion. Personally, I think Intel can probably handle almost anything AMD throws at it. But that's fine. The important thing is that AMD forces Intel to up its game. Then we all get better CPUs, regardless of who we buy them from.

As things stand, to the best of my knowledge Intel's mainstream platform currently looks unexciting for 2016. Its existing Skylake chips remain until near the end of the year before a new family of Kaby Lake processors arrives. But Kaby Lake sticks with the old four-core format and very likely similar speeds and overall CPU performance.

Admittedly, if you're made of money there will be a new $1,000 10-core processor on the high end platform. But it will take a successful Zen launch from AMD to shake up the mainstream. Here's hoping.

Screens, screens, screens

Next up, screens. Lovely, flat, panelly screens. With one possible exception, I'm not expecting any single explosive moment – no 14/16nm GPU die shrink, no AMD Zen bomb. But there will be lots going on.

For starters, I'm hoping AMD can put the final bit of polish on its FreeSync tech, which in theory makes games look smoother and sharper. For now, FreeSync is patchy enough in practice to be pointless. But it's surely fixable and it will likely become a near-standard feature over time, which will be great so long as you have an AMD video card.

Then there's the latest '2.0' version of HDMI becoming the norm. This matters because it allows for 4K at 60Hz refresh and means cheap 4K TVs will be viable as proper PC monitors. OK, you might prefer running at 120Hz plus. But in-game that's academic, because you probably won't be able to generate 120fps+ at 4K resolutions, in any case.
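For the curious, here's a back-of-the-envelope sketch of why the HDMI version matters for 4K at 60Hz. The two link figures are the commonly quoted usable data rates for HDMI 1.4 and 2.0 (my assumption, not numbers from the paragraph above), the function names are made up for illustration, and the sum ignores blanking overhead, so treat it as a rough guide rather than a spec check.

```python
# Rough check of which HDMI version can carry a given video mode.
# Assumptions: 24 bits per pixel, no blanking overhead, and the commonly
# quoted usable data rates for each HDMI version (not from the article).

HDMI_1_4_DATA_GBPS = 8.16   # assumed usable data rate of HDMI 1.4
HDMI_2_0_DATA_GBPS = 14.4   # assumed usable data rate of HDMI 2.0


def pixel_data_gbps(width, height, refresh_hz, bits_per_pixel=24):
    """Raw pixel data rate in gigabits per second (active pixels only)."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


def fits(width, height, refresh_hz, link_gbps):
    """True if the raw pixel data rate is within the link's data rate."""
    return pixel_data_gbps(width, height, refresh_hz) <= link_gbps


if __name__ == "__main__":
    for hz in (30, 60, 120):
        rate = pixel_data_gbps(3840, 2160, hz)
        print(
            f"4K at {hz}Hz needs about {rate:.1f}Gbit/s: "
            f"HDMI 1.4 {'ok' if fits(3840, 2160, hz, HDMI_1_4_DATA_GBPS) else 'no'}, "
            f"HDMI 2.0 {'ok' if fits(3840, 2160, hz, HDMI_2_0_DATA_GBPS) else 'no'}"
        )
```

Under those assumptions, 4K at 60Hz squeezes into HDMI 2.0 but not the older standard, and 4K at 120Hz fits neither.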

Curved was the big idea in 2015, what about 2016?

Already, there are cheap 40-inch 4K TVs with HDMI 2.0 available for under £400 in the UK (or circa $500 in the US, I would guesstimate). These screens will not be perfect as monitors. But the important bit is that a 40-inch 4K HDTV has a sensible pixel pitch for a PC monitor. Overall, it's a lot of screen, a lot of pixels, just a lot of desktop real estate for the money.
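If you want to sanity check the pixel pitch claim, the sums are simple enough. This is a minimal sketch assuming 16:9 panels measured on the diagonal; the function names are mine, and the 27-inch 1440p panel is there purely as a familiar yardstick.

```python
import math


def pixels_per_inch(width_px, height_px, diagonal_in):
    """Pixel density from resolution and diagonal size in inches."""
    return math.hypot(width_px, height_px) / diagonal_in


def pixel_pitch_mm(width_px, height_px, diagonal_in):
    """Distance between pixel centres in millimetres (25.4mm per inch)."""
    return 25.4 / pixels_per_inch(width_px, height_px, diagonal_in)


if __name__ == "__main__":
    screens = [
        ("40-inch 4K TV", 3840, 2160, 40),
        ("27-inch 1440p monitor", 2560, 1440, 27),
    ]
    for name, w, h, d in screens:
        print(
            f"{name}: {pixels_per_inch(w, h, d):.0f} pixels per inch, "
            f"{pixel_pitch_mm(w, h, d):.3f}mm pitch"
        )
```

Both land at roughly 110 pixels per inch, which is why a 40-inch 4K panel feels like a bigger version of a 27-inch 1440p desktop rather than a squint-inducing one.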

Meanwhile, existing screen-format favourites like 27-inch 1440p panels will just keep getting more affordable. Ditto high refresh tech and (if FreeSync ups its game) screens with Nvidia's G-Sync technology. And yes, even higher resolution screens like 5K and even 8K will pop up. But honestly, 4K will remain marginal for gaming for a while. So anything more is just silliness.

Quantum what?

Another interesting tech tweak to look out for in 2016 is quantum dot. Already popular in the HDTV market, quantum dot is not a revolutionary panel tech like, say, OLED. Instead, it's about making LCD backlights better.

The basics involve materials that absorb certain frequencies of light, convert it and re-emit. The “quantum” bit is because the semiconductor crystal on which it's based leverages a nanoscale effect known as quantum confinement, which in turn involves electron holes, two-dimensional potential wells and the exciton Bohr radius. Obviously.

Some quantum dots in jars, yesterday

Cut a long story short and you can stick these little suckers in your LCD backlight and improve the quality of light fairly dramatically and for cheap. And that means more accurate and vivid colours. I've not actually seen a quantum dot display as yet, so I cannot comment on quite how dramatic the difference is. But better backlights are very important to image quality so I am hopeful.

OLED already

As for the exception I spoke of earlier, I speak of OLED monitors. There's not space to go into the reasons here, but these things are going to be awesome when they arrive. I'd actually largely given up on the idea of OLED monitors. But 2015 saw the first truly viable OLED HDTVs appear and it seems inevitable the PC market will now follow. I'm just not sure if that will happen in 2016.

Don't forget the memory

Memory tech is the last big area of likely innovation next year, and specifically I'm talking solid-state drives or SSDs.

We already know that PCI Express tech combined with a new storage protocol known as NVMe is tearing up some of the bottlenecks that have been holding back SSDs in recent years. M.2 slots and U.2 sockets are becoming the norm and fully compatible drives are becoming available now, too.

Confusingly, PCI Express drives come in many forms

That alone is pretty exciting as it will mean a jump in bandwidth from about 550MB/s to roughly 1-2GB/s, depending on the specifics. But all that could seem positively prosaic if a new tech being developed by Intel and Micron turns out to be everything promised.
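To put the bandwidth figures above in context, here's a quick sketch of best-case sequential read times. The 20GB game install is a hypothetical size picked for illustration, and real load times depend on plenty besides raw drive bandwidth, so read this as an upper bound on the benefit.

```python
def transfer_seconds(size_gb, throughput_mb_per_s):
    """Best-case time to read size_gb at a sustained sequential rate."""
    return size_gb * 1000 / throughput_mb_per_s


GAME_SIZE_GB = 20  # hypothetical install size, for illustration only

if __name__ == "__main__":
    drives = [
        ("Existing SSD at about 550MB/s", 550),
        ("PCI Express drive at 1GB/s", 1000),
        ("PCI Express drive at 2GB/s", 2000),
    ]
    for label, speed in drives:
        print(f"{label}: {transfer_seconds(GAME_SIZE_GB, speed):.1f}s")
```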

Intel's SSD bomb

Known as 3D Xpoint, it's completely different from existing flash memory used in SSDs. Instead of memory cells composed of gates in which electrons are trapped, 3D Xpoint is a resistance-based technology. Each cell stores its bit of data by changing the conductive resistance of the cell material. What's more, individual cells do not require a transistor, allowing for smaller cells and higher density. In other words, more memory for less money.

At the same time, the '3D' bit refers to the stacked or multi-layer aspect of 3D Xpoint. It's basically the same idea as 3D flash NAND and allows for even more memory per chip. But arguably the really exciting bit is that the memory cells are addressable at the individual bit level. See, I thought you'd be impressed.

Ooooooh, the selector bone's connected to the cross point structure bone. Or something

In all seriousness, that's a big change from NAND memory, where whole pages of memory, typically 16KB, have to be programmed at once when storing just a single bit of data, and can only be erased in much larger blocks. The net result of that clumsy structure is that NAND requires all kinds of read-modify-write nonsense to store data and also needs complex garbage collection algorithms to tidy up the mess that ensues.
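Here's a toy model of that read-modify-write overhead, set against a bit-addressable store. It is a deliberately crude sketch: the class names are invented, the 16KB unit is the figure quoted above, and real flash controllers do far more (erasing, remapping, garbage collection) than this pretends to.

```python
UNIT_SIZE_BYTES = 16 * 1024  # the 16KB program unit quoted above


class ChunkProgrammedStore:
    """Toy NAND-like store: changing one bit rewrites a whole 16KB unit."""

    def __init__(self, num_units):
        self.units = [bytearray(UNIT_SIZE_BYTES) for _ in range(num_units)]
        self.bytes_written = 0

    def set_bit(self, bit_index, value):
        byte_index, bit = divmod(bit_index, 8)
        unit_no, offset = divmod(byte_index, UNIT_SIZE_BYTES)
        unit = bytearray(self.units[unit_no])      # read the whole unit
        if value:
            unit[offset] |= 1 << bit               # modify a single bit
        else:
            unit[offset] &= ~(1 << bit) & 0xFF
        self.units[unit_no] = unit                 # write the whole unit back
        self.bytes_written += UNIT_SIZE_BYTES


class BitAddressableStore:
    """Toy store where a single bit can be changed in place."""

    def __init__(self, num_bytes):
        self.data = bytearray(num_bytes)
        self.bits_written = 0

    def set_bit(self, bit_index, value):
        byte_index, bit = divmod(bit_index, 8)
        if value:
            self.data[byte_index] |= 1 << bit
        else:
            self.data[byte_index] &= ~(1 << bit) & 0xFF
        self.bits_written += 1


if __name__ == "__main__":
    chunked = ChunkProgrammedStore(num_units=4)
    direct = BitAddressableStore(num_bytes=4 * UNIT_SIZE_BYTES)
    chunked.set_bit(5, 1)
    direct.set_bit(5, 1)
    print(f"Chunk-programmed store wrote {chunked.bytes_written} bytes")
    print(f"Bit-addressable store wrote {direct.bits_written} bit")
```

Same one-bit change, but the first store shuffles 16KB around to make it happen. Multiply that by every small write and you can see where the overhead comes from.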

Well, you can kiss goodbye to all that with 3D Xpoint. And say hello to stupendous performance. 3D Xpoint is claimed to be up to 1,000 times faster, 10 times denser and 1,000 times more robust than existing flash memory.

In practice, I'm not close to buying the 1,000 times faster bit. But Intel demoed 3D Xpoint recently and the result was seven times faster random access performance than a conventional flash memory drive. That's not 1,000 times faster, but it's still one hell of a leap.

1000x this, 10x that. Believe it when you see it...

The slight confusion in all this is that Intel's messaging re Xpoint has been mixed. There's talk of it being used to replace RAM in server systems and noises about it not being intended to replace flash memory. So I'm trying not to get too excited until clarity is achieved.

But 3D Xpoint-based drives are apparently due in 2016 and they might just be as big a jump over existing SSDs as SSDs were over magnetic hard drives.

So, exactly what have we learned?

So let's recap. Last time around we had a big jump in chip manufacturing tech allowing for graphics chips perhaps twice as fast. Then there's the possibility of AMD putting a bat up Intel's nightdress. We might just see OLED monitors. And SSD performance could suddenly go interstellar. Not bad for a single year in PC tech, eh?

As for everything else, I'll let Alec and the crew keep you posted on the likes of the Valve controller rollercoaster and virtual reality (VR) technology. I have my doubts re the latter purely because of the need to strap something ungainly to one's head. But I would be very happy to be proved wrong and when you factor in the performance gains coming in 2016, it wouldn't be a bad year at all to really push VR.

Of course, you might say all this is about time after a pretty sucky year or three in graphics, CPUs and storage. And I wouldn't argue. But however you slice it, 2016 is looking pretty awesome.

TL;DR

- AMD's new Zen CPUs will put a bat up Intel's nightdress (hopefully)

- Monitors will get better and cheaper and if OLED arrives it will be awesome

- Intel and Micron's 3D Xpoint tech could make existing SSDs look like slugs