Is Nvidia's New Titan X Uber-GPU Good Enough?

Yup, it's $1,000

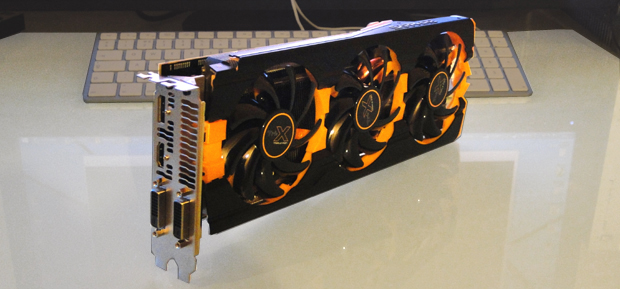

The same. But different. In a good way. That's the take-home from the launch of Nvidia's new Titan X graphics. Yes, it's another $1,000 graphics card and thus priced well beyond relevance for most of us. And yet it's different enough, philosophically, from Nvidia's previous big-dollar Titans to signal something that does matter to all of us. The focus with Titan X has moved back to pure gaming and away from doing other variously worthy and unworthy stuff on GPUs, like folding proteins or, I dunno, surveying for oil.

Generally, I was pretty pleased when I saw the specs for the new GeForce GTX Titan X board. But not actually surprised. I wasn't surprised because it was already known that the GM200 graphics chip inside Titan X was an eight billion transistor effort made using ye olde 28nm silicon from manufacturer TSMC, if you care about such things.

That's an insane number, the eight billion thing. But it's not actually dramatically bigger than the previous-gen 7.1 billion transistor chip which, in various guises, powered the likes of the original Titan, the Titan Black and indeed the 780Ti.

In other words, eight billion transistors makes for an incredibly complex computer chip. And yet not an order of magnitude more complex, not a generational leap in complexity versus that 7.1 billion figure. In that context, something had to change beyond mere complexity if Titan X was to look sufficiently impressive in the inevitable post-launch benchmarkathon.
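For anyone who wants the sums spelled out, here's a quick back-of-the-envelope sketch using only the two transistor counts quoted above. The variable and function names are my own invention for illustration, and transistor count is at best a rough proxy for complexity, so treat it as a sanity check rather than anything definitive.

```python
# Transistor counts quoted in the text, in billions.
GM200_TRANSISTORS_BN = 8.0  # the chip inside Titan X
GK110_TRANSISTORS_BN = 7.1  # previous-gen chip (original Titan, Titan Black, 780Ti)


def relative_increase(new: float, old: float) -> float:
    """Return the fractional increase of `new` over `old`."""
    return (new - old) / old


increase = relative_increase(GM200_TRANSISTORS_BN, GK110_TRANSISTORS_BN)
ratio = GM200_TRANSISTORS_BN / GK110_TRANSISTORS_BN

# An order of magnitude would be a ratio of 10; this is nowhere near that.
print(f"Increase: {increase:.1%}")
print(f"Ratio: {ratio:.2f}x")
```

That works out to roughly a 13 per cent bump in transistor count, which is why raw complexity alone was never going to carry Titan X.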

That something is twofold. Firstly, Titan X gets the same Maxwell graphics architecture as the likes of the GTX 980, 970 and 960.

It's an architecture that undeniably delivers the best frame-rate-per-transistor ratio in the industry. It is, in short, almost definitely the best graphics tech for PC gaming currently available.

Titan X is jolly fast, but a GTX 970 still makes infinitely more sense

But it still isn't quite enough on its own to ensure Titan X delivered a sufficient performance leap. So what Nvidia has done with GM200 is ditch all the fancy gubbins that boosts general-purpose (ie not graphics rendering) performance that went into the original Titan. Things like big fat registers and super-fast FP64 performance – the sort of stuff that contributes to general compute performance on a graphics chip.

The net result is eight billion transistors of pure gaming grunt. And that's a joy because of what it says about PC gaming: it's healthy enough for Nvidia to produce an absolutely traditional uber GPU that's designed expressly to do one thing really, really well. Play PC games.

While the old Titan was a marvel of engineering, it was never a pure gaming product. Indeed, the GK110 chip that Titan was based on first appeared in one of Nvidia's Tesla boards, which are so compute-centric they don't even bother with video outputs. There will not, I suspect, be a Tesla board based on this new chip. Nvidia will have to cook up something more specific for that.

All of which means that Titan X keeps the ball rolling for gaming graphics in general. Clearly most of us can't even consider buying one, but its very existence will help drag the entire market along that little bit, raise expectations of what is possible for PC graphics and encourage game developers to be that little bit more ambitious.

Oh, and the other thing Titan X does is deliver the closest thing yet to a single graphics card capable of 4K gaming without compromise. It's not actually quite there. But with most games, most of the time, it looks like you get decent frame rates at 4K without needing to switch much of the eye candy off.

I've not personally gone all the way to benchmarking a Titan X. But I've seen a few running and they're nice and quiet under load, and clearly kick out some serious frame rates. Worth the money? Not really, but still a no-brainer if you can easily afford one.

AMD's replacement for the 290X is coming soon, but will it be enough?

Or two – it's high enders like this that actually make sense in SLI multi-card setups. After all, if SLI is borked with a given game, your fallback is still the fastest single card money can buy. So there's no downside. Well, aside from the minor matter of the extra $1,000.

As for what AMD has in response, the much-mooted Radeon R9 390X is due a little later this year, though it's not clear exactly when. How it stacks up against the new Titan X will probably depend on two things. Firstly, is it made with those ancient 28nm transistors, or will it be the first performance graphics chip to move to newer production tech? Secondly, will it be yet another minor respin of AMD's existing graphics tech, known as GCN or Graphics Core Next, or has AMD responded to the remarkable efficiency of Nvidia's Maxwell tech?

If rumours that the 390X will be water-cooled as standard are true, then it seems the 390X is likely 28nm (not actually AMD's fault) and GCN only slightly revised. And that, I'm afraid, probably won't be good enough. Even with some fancy new stacked memory technology.

Anyway, I would say I'm pleased to have managed to opine at length on the new Titan X without once mentioning its 3,072 shaders or 12GB of graphics memory. But I just have. So I can't.

And for the record, UK pricing for the Titan X, which you can't quite buy yet but can pre-order, kicks off at a little over £850. But then if the precise price makes any difference to you, then you, like me, probably can't afford one.