Why You Don't Need Multiple Graphics Cards

"It just gnaws away at you, that doubt"

Apparently contrary forces, but suddenly complementary. Are AMD and Nvidia about to become the yin and yang of the PC gaming world? Possibly. Rumour has it graphicsy bits of that DirectX 12 thing that arrives with Windows 10 will allow for asynchronous multi-GPU (graphics processing unit). In other words, you'll be able to use AMD and Nvidia cards in the same rig at the same time to make games run faster. As rumours go, this is pretty spectacular. But it does rather remind me: multi-GPU is basically a bad idea. Here's why.

To be clear, I'm not saying multi-GPU is entirely devoid of redeeming features. My core position is that it's something that can come in handy in certain circumstances. But it's not something you should prioritise over a good single graphics card for better gaming performance. It is not, in other words, recommended by default.

What, exactly, is multi-GPU?

First, though, what exactly is it? The basic principle is super-simple. Use more than one graphics card to make your games run faster. And that's exactly what AMD claims for its Crossfire multi-GPU tech and Nvidia likewise for SLI. How and, indeed, if and when this actually works is a bit more complicated.

As soon as you have more than one graphics card (or strictly speaking more than one graphics chip, since some multi-GPU solutions are housed on a single card), you have the problem of how to share the rendering load between them. Broadly, there are two options. Split the image itself up or have each card render entire frames but alternate between them.

For option one, known as split-frame rendering, you can slice the image into big slabs, one per GPU. Or you can chop the image up into lots of little tiles and share them out. But most of the time, that's an academic distinction since alternate-frame rendering currently dominates (though this new DX12 lark may change that; more in a bit).

Again, the philosophy behind alternate-frame rendering makes perfect sense. You have all the cards rendering frames at the same time, each one working on a different frame and suitably staggered, and in theory you get double the performance with two cards. Triple with three. And so on.
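To make the two options concrete, here's a minimal Python sketch of how the work might be divided. It's purely illustrative: the function names are made up for this example, it ignores synchronisation and load balancing entirely, and it says nothing about how Crossfire or SLI are actually implemented.

```python
def alternate_frame_assignment(num_frames, num_gpus):
    """Alternate-frame rendering: whole frames are dealt out round-robin.

    Returns a list where entry i is the index of the GPU that renders frame i.
    """
    return [frame % num_gpus for frame in range(num_frames)]


def split_frame_slabs(image_height, num_gpus):
    """Split-frame rendering: one horizontal slab of the image per GPU.

    Returns a (start_row, end_row) pair per GPU, end_row exclusive.
    Any leftover rows are handed to the first GPUs, one row each.
    """
    base_rows, extra_rows = divmod(image_height, num_gpus)
    slabs = []
    start = 0
    for gpu in range(num_gpus):
        rows = base_rows + (1 if gpu < extra_rows else 0)
        slabs.append((start, start + rows))
        start += rows
    return slabs


# Two cards, six frames: the cards simply take turns.
frame_owners = alternate_frame_assignment(6, 2)

# Two cards, one 1080-row image: top half and bottom half.
slabs = split_frame_slabs(1080, 2)
```

With two cards, alternate-frame rendering has GPU 0 take the even frames and GPU 1 the odd ones, while the slab split gives each card half the rows of every frame.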

One frame. Then the other. It's not complicated

What's more, when you factor in how graphics cards are typically priced, multi-GPU looks even more attractive. High end boards can be double or more the cost of a decent mid-range card but offer perhaps only 50 per cent more performance.

Obviously, there's a whole spectrum of variables in terms of cards, pricing and performance. But for the most part, the price premium at the high end is not matched by the performance advantage. That applies whether you're planning a new rig with box-fresh hardware or you're thinking of adding a second card. So the theory behind multi-GPU is sound.
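The arithmetic is easy enough to sketch. The snippet below uses only the rough ratios mentioned above (a high-end card at double the cost for 50 per cent more performance); the units are relative, and `scaling_efficiency` is a hypothetical knob I've added for illustration, not a measured figure for any real card or game.

```python
def perf_per_cost(performance, cost):
    """Relative performance delivered per unit of money spent."""
    return performance / cost


def dual_card_performance(single_card_perf, scaling_efficiency):
    """Performance of two identical cards.

    scaling_efficiency is the fraction of the second card's performance
    you actually get: 1.0 is the ideal doubling, 0.0 means multi-GPU
    isn't working and you're back to single-card speed.
    """
    return single_card_perf * (1.0 + scaling_efficiency)


# Illustrative figures only: mid-range card = 1.0 performance at 1.0 cost,
# high-end card = 50 per cent faster at double the cost.
MID_PERF, MID_COST = 1.0, 1.0
HIGH_PERF, HIGH_COST = 1.5, 2.0

high_end_value = perf_per_cost(HIGH_PERF, HIGH_COST)
ideal_dual_value = perf_per_cost(dual_card_performance(MID_PERF, 1.0), 2 * MID_COST)
broken_dual_value = perf_per_cost(dual_card_performance(MID_PERF, 0.0), 2 * MID_COST)
```

On these made-up numbers, two mid-range cards scaling perfectly beat the single big card for the same money. But the second card has to deliver at least half its performance just to draw level, and if multi-GPU isn't working at all you've paid double for mid-range speed.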

Does it actually work?

But what of the practice? The problem is that it doesn't necessarily work. I don't mean it doesn't work at all. I mean it doesn't work quite well enough or quite often enough, and I'm not talking about simple peak frame rates. When everything is hooked up and humming, multi-GPU frame rates can come close enough to the per-card boost the basic theory suggests.

Well, they can if we're talking two GPUs. The benefits drop off pretty dramatically beyond two chips. No, the real problem is reliability. Sometimes, multi-GPU doesn't work at all, defaulting your rig back down to single-card performance.

Exactly how often does this happen? Probably not often at all if the context is well-established, mainstream game titles and graphics tech. But with anything really new, be that a game or a GPU, the odds of multi-GPU borkery go up sharply.

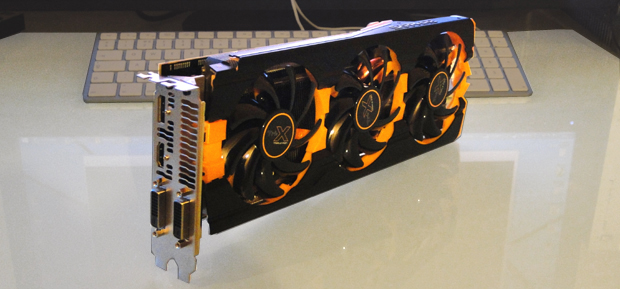

If you're going to go multi-GPU, this is the way to do it. Just two cards

For me, the really frustrating aspect is that it's fundamentally hard to be absolutely sure it's working. Again, for a familiar title, you may have a pretty good idea of what frame rates to expect. But with a new game you'll be unsure and it just gnaws away at you, that doubt. The not always knowing if the multi-GPUness is working.

Then there are the image-quality issues. Multi-GPU can throw up funky things like see-through walls that aren't supposed to be see-through, flashing textures and micro-stuttering. Yes, on both a per-game and global basis this stuff gets addressed over time, in patches or in drivers. But then new games or drivers or GPU architectures come out and stuff is broken again for a bit.

Without fail, every time I've reviewed a multi-GPU solution I've had some kind of problem running games. In that sense, I probably have a better overview of the wider realities than someone else who has an SLI or Crossfire rig and finds it runs pretty sweet nearly all the time.

So far, we've been talking about software faults. But add a second card and you are increasing the odds of hardware failure. That's true in terms of the simple maths of having more components. If you had a billion graphics cards, you'd experience constant failures.
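For the curious, the simple maths looks something like this. It's a back-of-the-envelope sketch that assumes each card fails independently with the same probability over some period; the 2 per cent figure is invented for illustration and isn't a real failure rate for any hardware.

```python
def chance_of_any_failure(per_card_failure_rate, num_cards):
    """Probability that at least one of num_cards fails.

    Assumes every card fails independently with the same probability,
    so the chance that all of them survive is (1 - rate) ** num_cards.
    """
    return 1.0 - (1.0 - per_card_failure_rate) ** num_cards


# Hypothetical 2 per cent chance of a given card failing.
ASSUMED_FAILURE_RATE = 0.02

one_card = chance_of_any_failure(ASSUMED_FAILURE_RATE, 1)
two_cards = chance_of_any_failure(ASSUMED_FAILURE_RATE, 2)
a_billion_cards = chance_of_any_failure(ASSUMED_FAILURE_RATE, 10**9)
```

Under those assumptions, going from one card to two very nearly doubles the chance that something dies, and with a billion cards a failure is a practical certainty.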

It's also true, though to a much lesser extent, in terms of the stress it puts on your system. It loads your power supply and can increase overall operating temps. That kind of thing. In every way, then, multi-GPU makes your rig that little bit dicier.

A single big beast remains best

Who actually uses it?

I'm also far from convinced many people really use multi-GPU. Sadly, as far as I can tell, Steam no longer breaks out stats for multi-GPU, so accurate figures of how many of us use it aren't available. But I reckon it's a very small proportion. In fact, I reckon multi-GPU mainly continues to exist because it's a handy marketing tool that helps lock us into one vendor or the other and has us buying more expensive hardware with multi-GPU support on the off chance we might use it one day, even though the vast majority of us never do.

Like I said above, I'm not damning all multi-GPU tech as foul and useless. If you only play a few games or incline only towards the biggest new releases, you're cutting down the odds of having problems dramatically.

I can also see how grabbing a second-hand card on the super-cheap can make sense if your system is otherwise ready to accept it. Let's say you've got an AMD 280 or Nvidia 770. If you could pick a used one up for cheap from a reliable source, that is possibly appealing, and cheaper than upgrading.

But it would have to be very cheap. And the more promiscuous your gaming in terms of chopping and changing titles, the more likely you are to have problems. For almost anyone, I still prefer the approach of spending a bit more on the biggest, beefiest single GPU you can lay your hands on and just getting on with enjoying your gaming.

As for whether the latest rumour involving asynchronous multi-GPU will change any of this, I doubt it. OK, the prospect, as mooted, of changing the way multi-GPU works so that your available video memory actually goes up as you add cards sounds very interesting. It would help with one of the biggest weak points of using multi-GPU over time, namely that the frame buffers on old cards can be small enough to bork frame rates. But I doubt it will actually work because I doubt AMD or Nvidia will want it to work. And I doubt that even if it does work it will be fundamentally more reliable than single-vendor setups. Quite the opposite.

Shout out below if you disagree: I know multi-GPU certainly has its proponents out there. At the very least, it would be interesting to get a feel for how many of you are happily powering along with multi-GPU of some flavour or other. I feel it's few. Let's find out.