Microsoft unveil VALL-E, their creepy AI that can mimic voices

It only needs 3 seconds of audio

A team of researchers at Microsoft have published a paper on VALL-E, their new AI that can generate realistic impersonations of human speech based on samples just 3 seconds long. It's a concerning development for voice actors, as well as anyone who could be duped into thinking they're on the phone with a relative who desperately needs their card details. I'm usually struck by the impressiveness of new AI tricks before I think about their negative implications, but I found this unsettling from the off.

You can play some of the samples for yourself on Microsoft's GitHub demo, or else watch the video below.

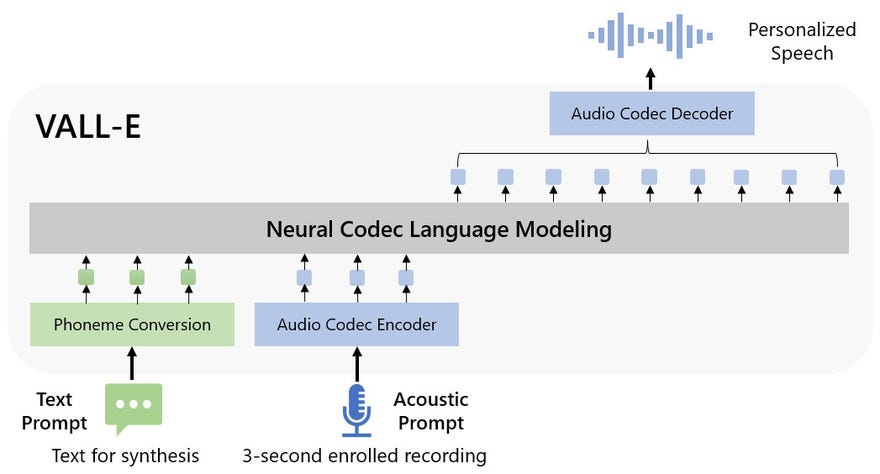

The researchers describe VALL-E as a "neural codec language model", trained on "discrete codes derived from an off-the-shelf neural audio codec model". They also say it's trained on 60,000 hours of speech, "which is hundreds of times larger than existing systems". AI designed to realistically mimic human speech has been around for a while, but these samples are convincing where other attempts sound pretty clearly robotic.

As the researchers point out, VALL-E can "preserve the speaker's emotion and acoustic environment" of the prompt. That's impressive, but different to landing on the right tone and emotion in a performance, so it's still a long way from replacing voice actors. I can't see even an advanced version of VALL-E giving performances that outshine those of talented professionals - but companies have a tendency to pursue what's cost-effective rather than what's best.

It's a heady time for AI advances, with ChatGPT now capable of writing essays and correcting coding errors, while the likes of Midjourney and DALL-E spew out images you can easily mistake for the work of human artists. I wish we could play with all these toys in a world where they didn't threaten people's livelihoods.