The DirectX 10 Cri/ysis

Yes, we know we're horribly behind most of the rest of the internet in this (and in fact didn't bother to mention it previously for just that reason), but hell, if we ever want to be the biggest PC gaming website in the world we should probably make the effort. I've compensated for our tardiness by adding several hundred words of bonus ranting to it.

Skip to the end if you can't be bothered with me waffling on about Microsoft conspiracy theories and just want to find out how to make the Crysis demo look way better under hoary old Windows XP. Otherwise, don your finest head-fitting tinfoil and read on.

DirectX 10, then. A colossal balls-up, what? The idiocy began when Microsoft announced that the next iteration of the software that defines the graphical capabilities of Windows games would be available in Vista only. XP may have been over half a decade behind it, but that doesn't mean there's any concrete justification why it can't do the most pertinent parts of DX10 (plenty of non-concrete ones were given). It smacked of artificially creating reasons to make people who otherwise wouldn't bother upgrade to Vista, and hopefully the megabrains working on hacking it out to work in XP will soon accomplish their grand goals, thus throwing egg on the requisite stern corporate faces.

The slim catalogue of DX10 games thus far has only made the people who did splash out on Vista and a DX10 graphics card feel more ashamed of themselves. Lost Planet? a) A bit shitty b) Didn't look or perform any better in DX10. Bioshock? a) ~~A bit shitty.~~ Awesome, if more traditional than hoped b) The water looks a bit better and the shadowing a little sharper in DX10. Or does it? Oh, my failing eyes. World in Conflict? Nice lighting, actually. Company of Heroes (thanks to a recent patch)? Again, no really significant difference.

At the very least, performance in DX10 vs DX9 for supported games should have been better. It wasn't. Early graphics card drivers have been blamed for that and for the overall slight framerate toll Vista takes on most games, but as NVIDIA and ATI's software becomes more mature as the months go by, it's increasingly hard to claim that Vista's resource hunger and general inefficiency don't play a part. It's offensive enough that Microsoft is squeezing the hand that's had a chokehold around PC gaming's neck for so long even harder; it's more offensive still that it hasn't done anyone any good.

Through all this, Crysis remained the light at the end of this sluggish train's tunnel. The first true DX10 game. And lordy-lord did the screenshots look good. £250-£300 on a new graphics card and a new operating system didn't seem too stinging a price to pay for such eyeball-fondling wonder.

Alarm bells really started ringing for me a couple of months ago, with the revelation that physics and day/night cycles wouldn't be available in Crysis multiplayer in DirectX 9 (and thus in XP), meaning DX10 players would have to play on separate servers to DX9 players if they wanted the full woo-yeah experience.

I could possibly stomach a claim that Crysis' hot tree dismemberment wouldn't be available at all in DX 9, but just the multiplayer? I'm flailing around in the dark to a certain extent on the tech reasoning, but it seems to me that such effects are handled by the client, not the server. Players' hardware shouldn't affect what the server's capable of to that extent, especially when we now know the effects in question are available in DX9 Crysis singleplayer. There's every chance there really is a genuine technical explanation why this has to be the case, but if there is it hasn't been well-expressed. [Taps tinfoil hat knowingly]. I call shenanigans. If anything, it sounds like a cynical excuse for DX9/DX10 segregation online, those who haven't paid the Vista tithe contrivedly rendered desperate to ascend to tree-shattering, sunset-swathed DX10 valhalla.

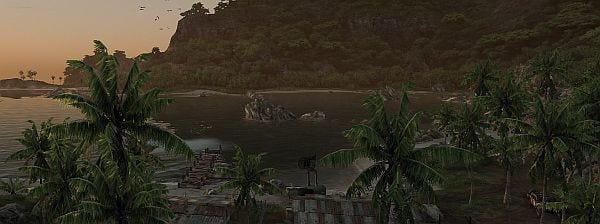

And now this. While Crysis in Vista and with a DirectX 10 graphics card is indeed rendered in the supposedly faster-performing DX10, its maximum visual wow is not unavailable to XP. DX9 can do most of what DX10 can, just (theoretically) not as efficiently. It's just that Crytek, or EA, or Microsoft, or some jiffy-bag-full-of-money agreement betwixt all three, have artificially locked out the 'Very High' graphical detail setting in XP. A simple config file tweak reactivates it, and Crysis can then look as beautiful as it does in DX10 with maximum detail in DX9.

Here's how, courtesy of a clever Actiontrip forumite.

Browse to C:\Program Files\Electronic Arts\Crytek\Crysis SP Demo\Game\Config\CVarGroups, and open one of the .cfg files therein in Notepad or whatever (make backups first). The first paragraph is the Vista-exclusive Very High settings; the last paragraph is High. So, copy the contents of the first paragraph over the contents of the last paragraph, then save. Repeat this for all the .cfg files in that folder, and then load up the demo. Selecting High settings will in fact activate Very High. If you're confused, there's more help in this thread.
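If doing that by hand for every file sounds tedious, here's a rough Python sketch of the same copy-paste job. It's my own guesswork rather than anything from the forum thread, and it leans on a few assumptions: that "paragraphs" are blocks separated by blank lines, that a block's first line may be a bracketed header (something like `[default]`) which the last block should keep, and that the function names and the `.bak` backup suffix are ones I've made up for illustration. Check a patched file by eye before trusting it.

```python
import shutil
from pathlib import Path


def split_paragraphs(text):
    """Split text into blocks separated by one or more blank lines."""
    blocks, current = [], []
    for line in text.splitlines():
        if line.strip():
            current.append(line)
        elif current:
            blocks.append("\n".join(current))
            current = []
    if current:
        blocks.append("\n".join(current))
    return blocks


def promote_first_to_last(text):
    """Return text with the last paragraph's contents replaced by the first's."""
    blocks = split_paragraphs(text)
    if len(blocks) < 2:
        return text  # only one paragraph, nothing to copy over
    first = blocks[0].split("\n")
    last = blocks[-1].split("\n")
    # Assumption: a first line starting with "[" is a section header.
    # If both blocks have one, the last block keeps its own header.
    if first[0].startswith("[") and last[0].startswith("["):
        new_last = [last[0]] + first[1:]
    else:
        new_last = first
    blocks[-1] = "\n".join(new_last)
    # Side effect: runs of blank lines are collapsed to a single blank line.
    return "\n\n".join(blocks) + "\n"


def patch_folder(folder):
    """Back up and patch every .cfg file directly inside folder."""
    for cfg in sorted(Path(folder).glob("*.cfg")):
        backup = cfg.with_name(cfg.name + ".bak")  # hypothetical suffix
        if not backup.exists():
            shutil.copy2(cfg, backup)  # make backups first
        cfg.write_text(promote_first_to_last(cfg.read_text()))
```

To use it, call `patch_folder` with the CVarGroups path above (as a raw string, so the backslashes survive). To undo the change, copy each `.bak` file back over its original.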

Of course, you'll still need a monster rig to reach anything like a playable framerate, though some folk are reporting maxed-out Crysis actually runs faster in XP than Vista.

More importantly, it reveals that there's certain skullduggery at play in terms of Crysis and DirectX 10. It may be pretty, but it's not the major technological sea-change we've been led to believe it might be. Now we know for sure that DX10-level visuals do not require DX10 and Vista. Can anything ever convince us again that they do? I patiently await the full version of Crysis being hacked to allow physics and day/night cycles in DX9 multiplayer.

(For the record, I run Vista as my main operating system and play the vast majority of my games on it. Some of my best friends are Vistas. Sure, it often annoys me, but that's been true of any version of Windows. I have no great problem with the OS, though I do with some of the marketing decisions behind it).