Smarter Than I: How Spirit Ally aims to tackle toxicity

Into the swamp

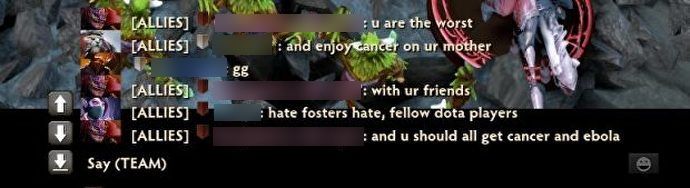

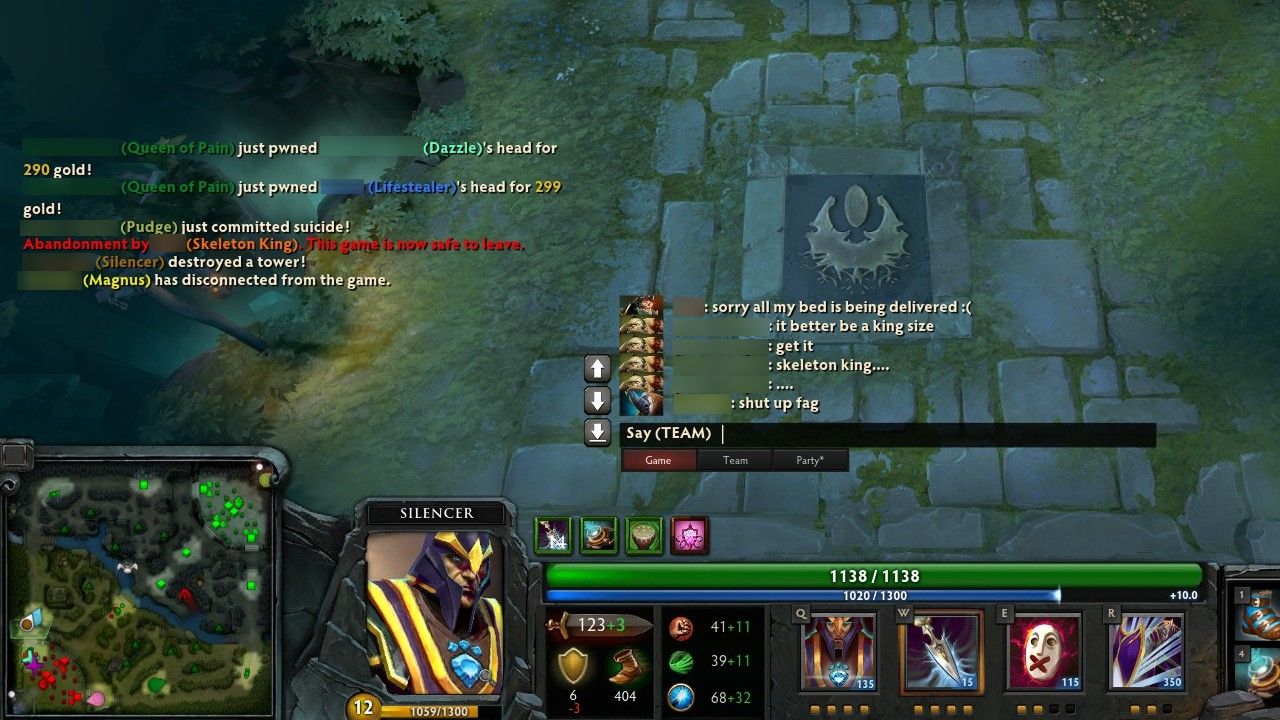

Some of the screenshots in this article contain examples of hate speech being used in in-game chat, including racism, antisemitism and homophobia.

The worst part of games is the asshats who play them. I don't mean you, I'm sure you're lovely. I mean the racists who swamped my Mordhau server last night with antisemitic gibberish. I mean the Dota players who've wished cancer on my mother, and the Overwatch teammates who've wished me worse. Toxicity in multiplayer games is near-universal, and designers have a responsibility to manage their communities. Fortunately, Spirit AI is developing a tool that might help.

Spirit Ally is an AI that (in its current form) assists human moderators in "creating safe, welcoming communities". If Spirit AI can expand the current tool's capabilities, its impact could be profound - but my chat with Emily Short, Spirit AI's chief product officer, didn't quite go as I expected.

At the moment, Spirit Ally primarily does analysis. It sifts through data, then brings key information to the attention of moderators.

"Say you're a community moderator, you're bringing up a dashboard to look at how your community has been over the recent past," Short began. "What Ally is doing in that case is looking at how people have interacted in your space and prioritising. If I've got a case where Ally has identified that this person has had fifteen different interactions, all of which are flagged, that person is going to rise to the top of the list."

That's useful, but hardly groundbreaking. When I got in touch with Short, I was under the impression that Ally was capable of directly contacting players. Spirit AI's website does rather give that impression, primarily through what turns out to be a mock-up of Ally asking someone in-game if another player is bothering them.

"I should clarify that there is not a live use case at the moment where Ally is driving a live interaction with players", said Short. "This is something we're prototyping, something we're exploring with clients. There is no sample out there of 'here's a moment in a game you could be playing where Ally is checking in with you'".

Spirit's website also boasts of how Ally can "enhance the reach of moderators by automating the detection, evaluation and response to problems" within a community. Ally is capable of reading chat in real time, but not of talking to players. Short told me Ally's primary role is to detect problematic behaviour, then respond in whatever way each client specifies. It might trigger "an automated response of some sort", such as muting the offending individual. Or it might contact a human moderator, and hope they're not too busy to intervene.

That hung a question mark over the rest of our conversation, but it seems likely that (sooner or later) Ally will be able to analyse interactions with players, then intervene however Spirit's clients see fit. Short was keen to stress how Ally can be customised to the needs of different clients. "There are lots of different ways we could deploy the system we're building, a lot of different rules we could apply", she told me, "depending on what the appropriate context is". Short then pointed to other services that simply strip out swearing or sexual content, and noted that, depending on the platform, either might be perfectly acceptable.

"On some platforms it's actually fine for that material to appear if it's consensual and everyone participating is an adult - they don't mind. What they would prefer is to be able to moderate out different kinds of toxicity, harassment or bullying."

I asked how Spirit AI have dealt with situations where clients have requested a service they don't believe will positively benefit their community. "We have declined to work with clients in a couple of specific cases. If their aim is to remove barriers towards somebody engaging with their product in a way that might be the result of a gambling addiction, we've just overtly said we don't want to solve that problem for you. We don't want to go there."

"There are also cases where clients have made what are basically requests where we can understand the community or business motivation, but we actually think are naively formed and likely to have an ethically bad result. Sometimes that's around what they want to look for, sometimes it's around how they want to monitor the behaviour of their moderators, or what they want to make available to their moderators."

I've no doubt that Short and her team are approaching these issues responsibly, which is vital given how numerous and thorny those issues are. A good illustration of that is how Short went on to talk about her concerns regarding human moderators.

"You want the moderators to have enough information to take effective action, but at the same time you don't want moderators to be in a position where [they can] for their own personal reasons spy on members of that community. So if people are talking about highly personal information, GDPR protected information, do we want moderators to be able to view that kind of thing, and can we black some of that out? What we don't want is for a moderation team to be empowered to use the tool to find out things that they could use to financially cheat the customers, or otherwise abuse their customer base."

Much of our chat centred around concerns like these. Short seemed genuinely, impressively, driven to help transform online communities into safe and comfortable places for all involved. We talked about the way moderators can be subjected to heinous content in copious quantities, especially with social media, and how a future version of Ally might be able to help. We talked about how Ally might be tailored to an individual's needs, perhaps offering them the ability to opt out of seeing messages of a particular nature in chat. We spoke about how nuance is often hard to communicate in text, and how different community norms require different approaches.

That's where Short lost me. Most of my experiences with online toxicity haven't been nuanced. They have been unequivocally awful. When I think about how I want online communities to improve, I'm not thinking about people violating social rules that stem from a particular context. I'm thinking about behaviour that is unacceptable in any context. I'm thinking about bad actors whose behaviour needs to be changed, or who need to be flat-out removed.

Short didn't deny that abusive behaviour was a problem, but she was also unwilling to say it was the main problem. Our conversation repeatedly turned back to how interactions like that take place on one end of a spectrum, and how she and her team want to approach the issue more broadly.

"You asked about messaging abusers, and certainly people are abusive - that is definitely a use case. But I think there's a whole spectrum, from full on trolls who come in with the intention of causing bad feeling and harm, to people who maybe are just interacting in a community that has different norms to what they're used to online. And their intentions are not necessarily bad, but they just are not appropriately behaving in that space. So we're really interested in using Ally to help communicate that information to people, and in that kind of case where we're talking to someone who is behaving inappropriately, that might be a warning, or that might be a suggestion along the lines of 'in this kind of space, that sort of thing is not ok'."

Short's priorities for improving online communities differ sharply from mine. It's not that I disagree with anything she said, and I'm thrilled that Spirit AI are approaching the issue in such a thoughtful and comprehensively responsible way. But I do disagree with Short's emphasis. The people mainly responsible for making me miserable mid-Dota match aren't those who need to be gently reminded that their behaviour might be upsetting. They're people who know what they're doing and, ultimately, don't care.

"Those people exist, and you're right, it's likely we're not going to persuade them via soft methods to stop", Short replied. "They have their own motivations, and so that's something where we need to help companies either ban those people or have some sort of method of excluding them from communal participation in a way that will end their ability to do harm. But there are also people who either don't understand the community norms, or who have for the time being forgotten the faceless individuals they're dealing with are human - I think that is also a pretty significant part of the mix."

We did agree, though, that the best scenario would be one where "there are fewer instances of bannable behaviour to start with". This is where I believe the true potential of Ally, or tools like it, lies, and also where we start to step beyond the scope of this article. My thoughts centre on Riot's research into how to shape player behaviour. Most interestingly, Riot found that altering the colour of a loading screen tip ("Players who cooperate with their teammates win X% of games") significantly changed the percentage of abuse recorded in that game.

Caveats abound. Exactly how Riot classified abuse is unclear, and they have an obvious incentive to exaggerate the significance of their work. Even so, it raises the possibility that a myriad of subtle effects could be leveraged to alter an abusive individual's behaviour. Might it be possible for a tool like Ally to test similar messages in thousands of different permutations, and arrive at context-tailored results, honed to scarily effective levels of persuasion?

When I put that to Short, she didn't dismiss the possibility. I also asked her if she saw a problem with manipulating people in that way. After pausing, laughing, then telling me that was probably the most loaded question she'd ever been asked in an interview, she replied in earnest.

"We attend, as a company, to the point that AI can shift people's experiences in a way that they're not really aware of and haven't had a chance to buy into. That's certainly something to think about. It's not a power to be applied lightly. I come back to the idea of letting people as individuals make some of these decisions for themselves, rather than putting everything in the hands of corporations. So alongside you getting to pick whether [certain kinds of chat message] come your way, there's also a level of transparency about what the system's doing. It's not some kind of secret Cambridge Analytica situation, where stuff's being shown in your feed and you thought it was objective but actually it's not.

"So that's a piece of it, from an ethical perspective. I also think there's a big difference between 'I've made a close study via manipulating tonnes and tonnes of data and I've worked out the best possible way to make people buy shoes or vote for Trump' versus 'we've looked into what is helping people understand how to not give offence to other people.' I genuinely think there is a difference between those two objectives! I'm much less morally concerned about 'oh, I put this lettering in the colour blue and therefore that was a more effective generator of empathy than if I'd displayed that lettering in red'. That seems comparatively non-evil, to me!"

A thought that's stuck with me, ever since I read it in a book or an article I've otherwise forgotten, is that one of the most important questions society needs to confront is "what forms of manipulation do we consider acceptable?" This is a context where, like Short, I'd be happy to see what an AI can do - especially an AI developed by a company with the ethos Short embodies.