Nvidia RTX 2080Ti vs GTX 1080Ti: Clash of the 4K graphics cards

You've been framed

Last week, after much hype and excitement, Nvidia's GeForce RTX 2080 graphics card was finally unleashed on the world. Today, it's the turn of its beefed-up big brother, the RTX 2080Ti, whose release was delayed by a week for reasons lost to the bowels of Nvidia's marketing department. As you can see from my Nvidia GeForce RTX 2080Ti review, this is hands down the best graphics card for 4K I've ever seen, and that's all down to the monstrous power of Nvidia's new Turing GPU. But how much of a leap does it represent over its immediate predecessor, Nvidia's GeForce GTX 1080Ti? To the graphs!

Much like my RTX 2080 vs GTX 1080 article, the figures you see below are based solely on each card's raw performance. We're still waiting for developers to patch in support for Nvidia's headline RTX features, such as DLSS (deep learning super sampling) and ray-tracing, so the numbers you'll find here should be broadly representative of what you'd get if you were to stick one in your PC today. I'll re-test the appropriate games as and when these features start arriving, but for now, this is what we've got to work with.

A small note before we begin. I ran my tests with an Intel Core i5-8600K and 16GB of RAM in my test PC, and in some cases the CPU was clearly holding things back at lower resolutions, with the RTX 2080Ti's results either worse than or only marginally better than the GTX 1080Ti's. As I mentioned in both RTX reviews, I'll be retesting everything with a better processor soon to see if that makes any difference, so don't be alarmed if some results look a little odd.

I should also point out that the games I've picked here are slightly different to the ones I included in my RTX 2080 vs GTX 1080 comparison article, as I haven't been able to get another GTX 1080Ti back in for testing since I did my Final Fantasy XV graphics performance round-up (curse those pesky cryptominers) with Zotac's GTX 1080Ti Mini.

As such, more recent games such as Shadow of the Tomb Raider and Monster Hunter: World are off the menu at the moment, so I've swapped those out for Total War: Warhammer II and Rise of the Tomb Raider instead. Otherwise, we've still got Assassin's Creed Origins, Middle-earth: Shadow of War and Final Fantasy XV, and they've all been tested at 3840x2160 (4K), 2560x1440 and 1920x1080 on max settings using their own internal benchmarks, except for FFXV, where I've worked out the average frame rate from my in-game testing results.

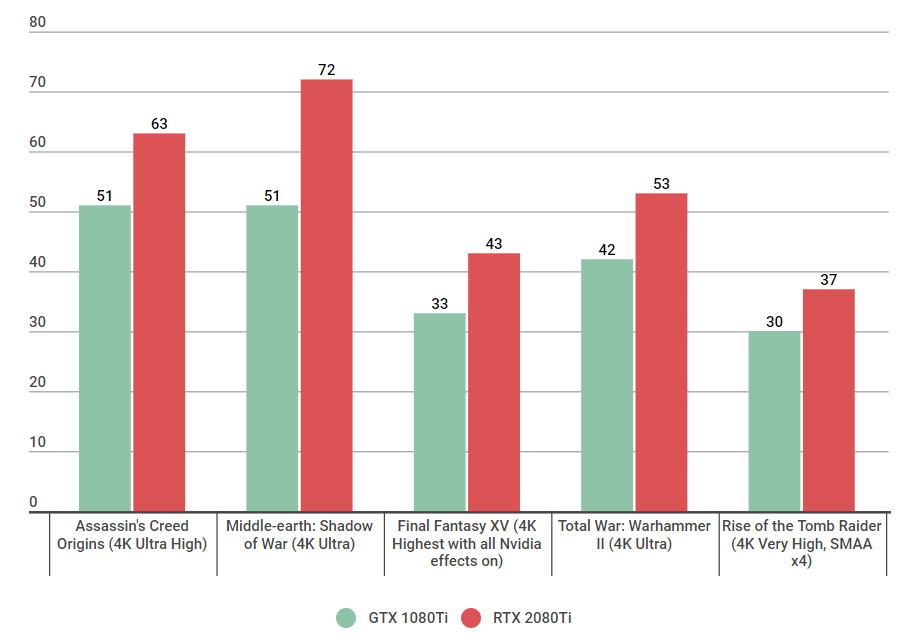

At 4K, the leap in performance at maximum settings is quite substantial. 10-20fps might not sound like much, but when the GTX 1080Ti was generally hovering around the largely playable but slightly janky zone of 30-40fps, those dozen or so extra frames can make a surprising difference to how good something feels to play. Indeed, the RTX 2080Ti pushed those figures much closer to that elusive 60fps across our five test games - except in Tomb Raider, of course, where that dastardly SMAA x4 anti-aliasing is still causing chaos years after the game's initial release. You can drop that down to FXAA or SMAA x2 and get much better results with both cards, but I chose the top SMAA x4 setting here simply to illustrate what each card is capable of when everything is maxed out.

That's not to say the GTX 1080Ti isn't a good option for 4K gaming on a single GPU, of course, as dropping all of these games down to High will still net you a highly respectable 50-60fps. However, if you want a single-card setup that provides absolute 4K perfection with zero compromises, the RTX 2080Ti is currently the only way you're going to get it.

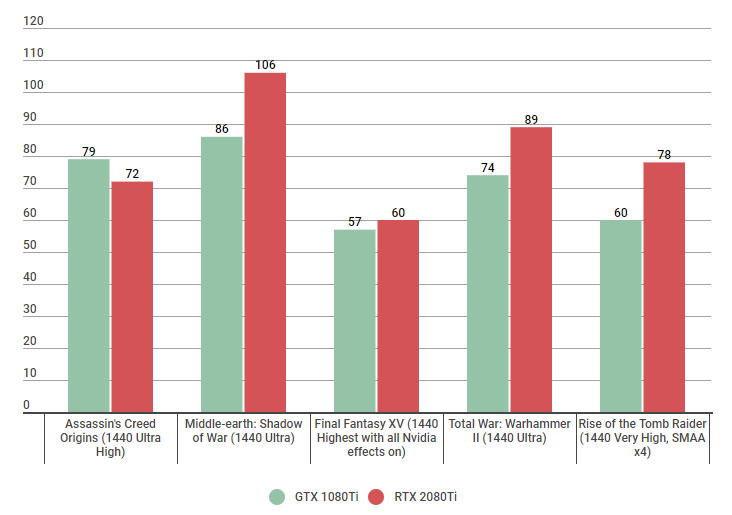

Moving down to 2560x1440, the GTX 1080Ti already offers more than enough power for gaming at 60fps at this resolution, so you'll only benefit from the differences illustrated above if you've got a high refresh rate monitor. On the whole, though, you're probably looking at an increase of 15-20fps with the RTX 2080Ti, unless you're playing Assassin's Creed Origins, where it actually performed worse than its GTX predecessor.

I suspect my Core i5 is acting as a bottleneck here, as the same thing happened again at 1080p below. Then again, Final Fantasy XV didn't show much of an increase either, with its taxing Nvidia VXAO setting keeping both cards steady at around 60fps.

Still, CPU issues aside, you'll get plenty out of each card at 1440p, especially if you've got a high refresh rate monitor. Whether you can actually tell the difference between 75fps and 90fps, however, is another matter entirely. Personally, I don't think my eyes are good enough to notice an extra 15-odd frames at this kind of speed, but those with superior sight balls may feel differently.

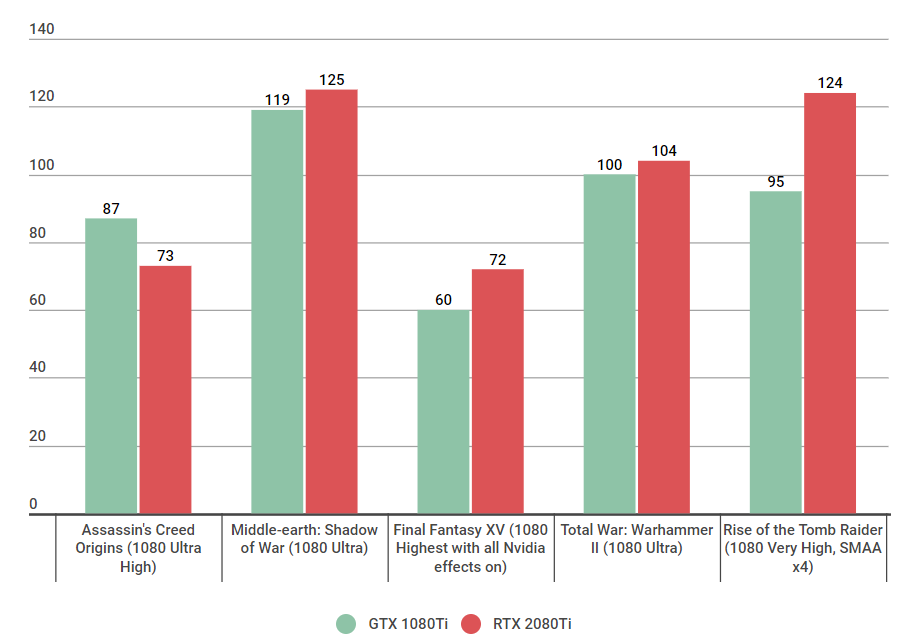

Meanwhile, at 1920x1080, we're once again firmly in high refresh rate monitor territory, albeit with even more severe CPU bottlenecking than we had at 1440p. Paired with a Core i5, the RTX 2080Ti often showed only marginal gains at this resolution, pushing frame rates up by an extra 5-10fps in Shadow of War, Final Fantasy XV and Total War, and once again showing no improvement at all in Assassin's Creed.

Only Rise of the Tomb Raider showed the kind of performance increase I was expecting from the RTX 2080Ti, offering a boost of around 30fps. However, until I re-test with a better CPU, it's hard to say whether you'll see similar leaps elsewhere. Either way, you're probably not buying either of these cards for playing games at 1920x1080 (and if you are, you're mad, as the Nvidia GeForce GTX 1050Ti or GTX 1060 will do everything you want at 1080p for a fraction of the cost), so their achievements at this resolution are, I'd say, relatively unimportant.

Ultimately, the GTX 1080Ti remains an excellent graphics card for those gaming at 2560x1440 and 4K, and as long as you're happy with High settings at 4K as opposed to Ultra, you might as well save yourself several hundred pounds / dollars and stick with one of these instead of shelling out for the £1049 / $1150 RTX 2080Ti. The RTX 2080Ti makes a bit more sense if you've got a high refresh rate monitor, as it will obviously be even faster than the GTX 1080Ti on High at 4K, but right now I don't think it's worth all the extra money involved.

There is, of course, one crucial element missing from these results, and that's all of Nvidia's special performance-boosting Turing bits and bobs. The key one is DLSS, which uses AI to help out with anti-aliasing, smoothing out all those surprisingly taxing sharp edges so the GPU can focus on pumping out more frames. Admittedly, adding DLSS results probably won't make a huge difference to this particular set of benchmarks, as right now Final Fantasy XV is the only one of these five games with confirmed DLSS support. Still, I'll be interested to see how much of a difference it actually makes to in-game frame rates, and I'll update my results accordingly once I've been able to test it properly.

If you want to buy a 4K-capable graphics card right now, I'd personally still err on the side of the GTX 1080Ti. It's more than capable of running most games at High settings at or around 60fps, and it's nowhere near as financially crippling as Nvidia's shiny new RTX card. Perfectionists may feel differently, but in terms of value for money, the GTX 1080Ti is still a tough act to beat.